Security Code Reviews: Master Your Secure Coding Strategy

A security code review is a deep dive into your application's source code, with one goal: find and fix security flaws before they ever make it to production. This isn't just about running a scanner; it's a critical process that pairs the speed of automated tools with the nuance of an expert human eye. Think of it as catching everything from simple configuration mistakes to the kind of complex business logic errors that only a person can spot. It's how you build software that's resilient from the inside out.

Why You Can't Afford to Skip Security Code Reviews

We live in an age of non-stop delivery. CI/CD pipelines are humming, and the pressure to ship features is relentless. But the old "move fast and break things" philosophy has a dangerous blind spot: security. The new mantra has to be "move fast and don't get breached."

Security code reviews have shifted from a painful, last-minute checkpoint to a continuous, integrated part of the development lifecycle. This isn't just a trend; it's a response to a harsh reality. Fixing a vulnerability after it's live in production is typically far more expensive and damaging than catching it while the code is still in the IDE. Today's cloud-native apps and complex build pipelines introduce entirely new attack surfaces, from leaked secrets in a git commit to insecure open-source packages.

The Real-World Cost of Insecure Code

The numbers don't lie. Ignoring code security is a massive financial gamble. The average data breach now costs companies around USD 4.44 million, and the total economic damage from cybercrime is rocketing into the trillions. These aren't just abstract figures; they represent real-world consequences that make systematic security code reviews a business imperative, not just a technical nice-to-have.

In heavily regulated fields, the stakes are even higher. For instance, robust code review is a cornerstone of effective medical device risk management, where software flaws can have life-or-death implications.

By weaving security reviews directly into your daily development workflow, you transform the practice from a bottleneck into a competitive advantage. It’s the difference between bolting security on as an afterthought and building it in from the very first line of code.

The Pillars of a Modern Review Strategy

You can't just throw one tool at the problem and call it a day. A truly effective security code review program is a layered strategy, blending automation and human intelligence for a defense-in-depth approach. Here's a look at the essential components.

Modern Security Code Review Pillars

| Pillar | Primary Goal | Example Tools & Techniques |
|---|---|---|
| Static Application Security Testing (SAST) | Find known vulnerability patterns at scale within your own source code. | Automated scanners checking for things like SQL injection, XSS, and hardcoded secrets. |
| Software Composition Analysis (SCA) | Identify known vulnerabilities (CVEs) in third-party libraries and dependencies. | Tools that scan your package.json or pom.xml to flag risky open-source code. |
| Manual Peer Review | Uncover complex business logic flaws, authorization issues, and subtle design weaknesses. | A developer or security engineer manually inspects code for context-specific errors. |
| AI-Assisted Reviewers | Provide real-time, context-aware security feedback directly in the developer's IDE. | In-editor tools that offer instant suggestions, bridging the gap between SAST and manual review. |

Each pillar plays a unique role. SAST and SCA tools are fantastic for casting a wide net and establishing a security baseline, catching common and known issues quickly. But it’s the manual and AI-assisted reviews that bring the critical context, catching the kinds of unique, application-specific flaws that automated scanners simply can't see.

Building Your Security Review Framework

A great security code review process doesn't just happen. It's built, piece by piece, to fit how your team actually works. The idea is to create something that developers see as a safety net, not a roadblock. You're aiming for a repeatable system that makes your code stronger without bringing development to a screeching halt.

It all starts with getting crystal clear on what you're trying to accomplish. Your goals will shape everything that follows.

- Chasing Compliance? If you're working toward standards like PCI DSS, HIPAA, or SOC 2, your framework needs to be a machine for generating evidence. Every step has to map back to specific controls that auditors will be looking for.

- Focused on Vulnerability Reduction? Maybe your objective is more direct. You might aim to kill all OWASP Top 10 vulnerabilities in the codebase or slash critical findings from your SAST scans by 50% in the next three months.

- Taking a Risk-Based Approach? You could also concentrate your firepower on the crown jewels of your application—the authentication flows, payment processing modules, or any service that touches sensitive customer data.

When you know what "done" looks like, it's a lot easier to prioritize where to spend your energy.

Defining Roles and Responsibilities

One of the quickest ways for a security process to fail is ambiguity. When no one's sure who owns what, security becomes a vague problem that everyone assumes someone else is handling. You have to assign clear roles.

- Developers: They're the first line of defense. Their job is to write clean code, run automated scanners on their own machines, and fix the easy stuff before a pull request even gets opened. They're also the primary reviewers in peer-to-peer code checks.

- Security Champions: Think of these as developers who've been given extra security training because they have a real passion for it. They become the go-to security person on their team, helping triage findings and giving a first pass on security-sensitive code changes.

- Security Team/Engineers: This is your specialized unit for the most complex, high-risk reviews. They set the rules, manage the tools, and have the final say on critical vulnerabilities. They're also the ones who train the security champions.

This tiered system frees up your dedicated security pros to focus on the biggest threats while empowering developers to own security in their day-to-day work.

Creating a Pre-Review Readiness Checklist

Before a single human lays eyes on the code for a manual review, it needs to be ready. A pre-review checklist is a massive time-saver, ensuring all the basic checks are done so reviewers can hunt for deep-seated logic flaws instead of simple slip-ups. Automate this as much as you can.

A great framework empowers developers to find and fix issues themselves. The less a security engineer has to flag, the more successful your process is. The review itself should be a confirmation of quality, not the primary discovery phase.

Your checklist should make sure that:

- Automated Scans Have Passed: All your SAST, SCA, and secret scanning tools have run, and no new high-severity issues have popped up. This is non-negotiable. If you're not doing it already, learn how to implement comprehensive GitHub secret scanning as a baseline.

- Code is Self-Documented and Clean: The code should be easy to read, with clear comments explaining any tricky parts. The pull request description needs to be just as clear about what the change does and why.

- Unit and Integration Tests are Written: New features need new tests. If the tests don't pass, the code isn't ready for a security review because it's not even proven to work correctly yet.

- Dependencies Have Been Vetted: Any new third-party libraries have been run through an SCA tool to check for known vulnerabilities.
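The checklist above lends itself to automation. Here's a minimal sketch of what that could look like, assuming your CI system can hand you a summary object for each pull request. The field names (`newHighSeverityFindings`, `testsPassed`, `scaScanned`, and so on) are hypothetical and would need to be mapped to whatever your tooling actually reports.

```javascript
// Hypothetical pull request summary; every field name here is illustrative.
function checkReadiness(pr) {
  const failures = [];

  if (pr.newHighSeverityFindings > 0) {
    failures.push('automated scans report new high-severity findings');
  }
  if (!pr.description || pr.description.trim().length === 0) {
    failures.push('pull request description is empty');
  }
  if (!pr.testsPassed) {
    failures.push('unit or integration tests are failing');
  }
  const unvetted = pr.newDependencies.filter((dep) => !dep.scaScanned);
  if (unvetted.length > 0) {
    failures.push(`dependencies not vetted: ${unvetted.map((d) => d.name).join(', ')}`);
  }

  return { ready: failures.length === 0, failures };
}

const readiness = checkReadiness({
  newHighSeverityFindings: 0,
  description: 'Adds order export endpoint',
  testsPassed: true,
  newDependencies: [{ name: 'example-csv-lib', scaScanned: false }],
});
console.log(readiness);
```

Returning the list of failures, rather than a bare true/false, gives the developer something actionable to fix before asking for a human review.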

A solid security framework isn't just about internal checks, either. It should also include external validation like Penetration Testing as a Service (PTaaS), which brings in outside experts to simulate real-world attacks and see how your defenses hold up. By setting clear goals, defining roles, and using readiness checks, you build a system where security becomes a natural part of shipping code, not an afterthought.

Weaving in Automation and AI-Assisted Tooling

Let's be real: manually reviewing every single line of code in a modern development cycle is a recipe for disaster. It just doesn't scale. To do security code reviews right, you need a smart mix of automation and intelligent tooling. Think of it as a force multiplier for your team, handling the grunt work so your human experts can focus on the tricky, nuanced problems that automated systems always miss.

The idea is to bake security checks right into your CI/CD pipeline, making them a non-negotiable part of the process. This means tools like Static Application Security Testing (SAST) and Software Composition Analysis (SCA) should run on every single pull request. If a critical vulnerability pops up, the build fails. Simple as that. It's immediate, clear feedback for the developer who just pushed the code.

The Power of Automated Gates in Your Pipeline

Setting up these automated gates is a foundational step. A common workflow looks something like this: you configure your CI/CD platform—whether it's GitHub Actions or Jenkins—to kick off SAST and SCA scans automatically. If a scan flags a high-severity issue, like a potential SQL injection flaw or a dependency with a known critical vulnerability, the whole process stops. The pull request is blocked from being merged. Period.

This immediate feedback loop is everything. It shifts vulnerability discovery from a slow, manual review process to an instant, automated check. Developers learn what secure code looks like in real-time, right when they're working on the feature, which helps prevent the same mistakes from popping up again and again. More importantly, it establishes a security baseline that keeps the common, well-known vulnerabilities from ever making it into your main branch.
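To make the gate concrete, here's a rough sketch of the decision logic, assuming your SAST and SCA tools can export findings as a list of objects with a `severity` field. The finding shape, the rule names, and the `evaluateGate` helper are all made up for illustration; real scanners each have their own report formats.

```javascript
// Assumed severity scale; adjust to match your scanner's vocabulary.
const SEVERITY_RANK = { low: 1, medium: 2, high: 3, critical: 4 };

// Returns the findings that should block a merge at the given threshold.
// Findings with an unrecognized severity are treated as non-blocking here.
function evaluateGate(findings, threshold = 'high') {
  const limit = SEVERITY_RANK[threshold];
  const blocking = findings.filter((f) => (SEVERITY_RANK[f.severity] ?? 0) >= limit);
  return { passed: blocking.length === 0, blocking };
}

const scanFindings = [
  { tool: 'sast', rule: 'sql-injection', severity: 'high', file: 'src/orders.js' },
  { tool: 'sca', rule: 'outdated-package', severity: 'low', file: 'package.json' },
];

const gate = evaluateGate(scanFindings);
if (!gate.passed) {
  // In a real pipeline you would exit non-zero here so the job fails.
  console.error(`Blocking merge: ${gate.blocking.length} finding(s) at or above threshold`);
}
```

Keeping the threshold as a parameter means you can start by blocking only on critical findings and tighten the gate once the noise is under control.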

Automation isn’t about replacing human reviewers; it’s about amplifying their impact. By filtering out the low-hanging fruit, automated tools free up your security team to hunt for complex business logic flaws and architectural weaknesses.

Shifting Left with AI in the IDE

The next step in this evolution is to bring that security feedback even closer to the developer—directly into their Integrated Development Environment (IDE). This is where a new wave of AI-assisted tooling is making a real difference. Instead of waiting for a pipeline to fail ten minutes later, developers get security advice as they type.

Imagine a developer writing a function that talks to a database. An AI-powered tool like kluster.ai, running quietly in the background, can instantly flag that the code might be vulnerable to an injection attack and suggest a safer, parameterized query. This isn't just some generic warning; it's contextual feedback, tailored to the exact code being written at that moment.

This approach pays off in a few key ways:

- Immediate Correction: Issues are caught and fixed the second they're created, which is always the cheapest and fastest time to address them.

- Educational Value: Developers learn secure coding practices organically, as part of their day-to-day workflow, not through a boring, abstract training session they'll forget in a week.

- Reduced Friction: Security becomes a helpful collaborator inside the IDE, not some adversarial gatekeeper that shows up late to block their work.
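For reference, this is the kind of change an in-editor suggestion about injection would typically point toward. It's a small sketch, not tied to any particular tool: the `users` table is hypothetical, and the `$1` placeholder style is the one used by PostgreSQL drivers such as node-postgres (other drivers use `?`).

```javascript
// UNSAFE: user input is concatenated straight into the SQL text.
function buildUnsafeQuery(email) {
  return { text: `SELECT id, email FROM users WHERE email = '${email}'`, values: [] };
}

// SAFER: the SQL text is constant and the input travels separately as a bound value.
function buildParameterizedQuery(email) {
  return { text: 'SELECT id, email FROM users WHERE email = $1', values: [email] };
}

const maliciousInput = "x' OR '1'='1";

// The attacker's quote characters become part of the SQL statement itself.
console.log(buildUnsafeQuery(maliciousInput).text);

// The SQL text is unchanged; the driver sends the input as data, not as SQL.
console.log(buildParameterizedQuery(maliciousInput));
```

The key property to look for in review is that no user-controlled value ever ends up inside the query string itself.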

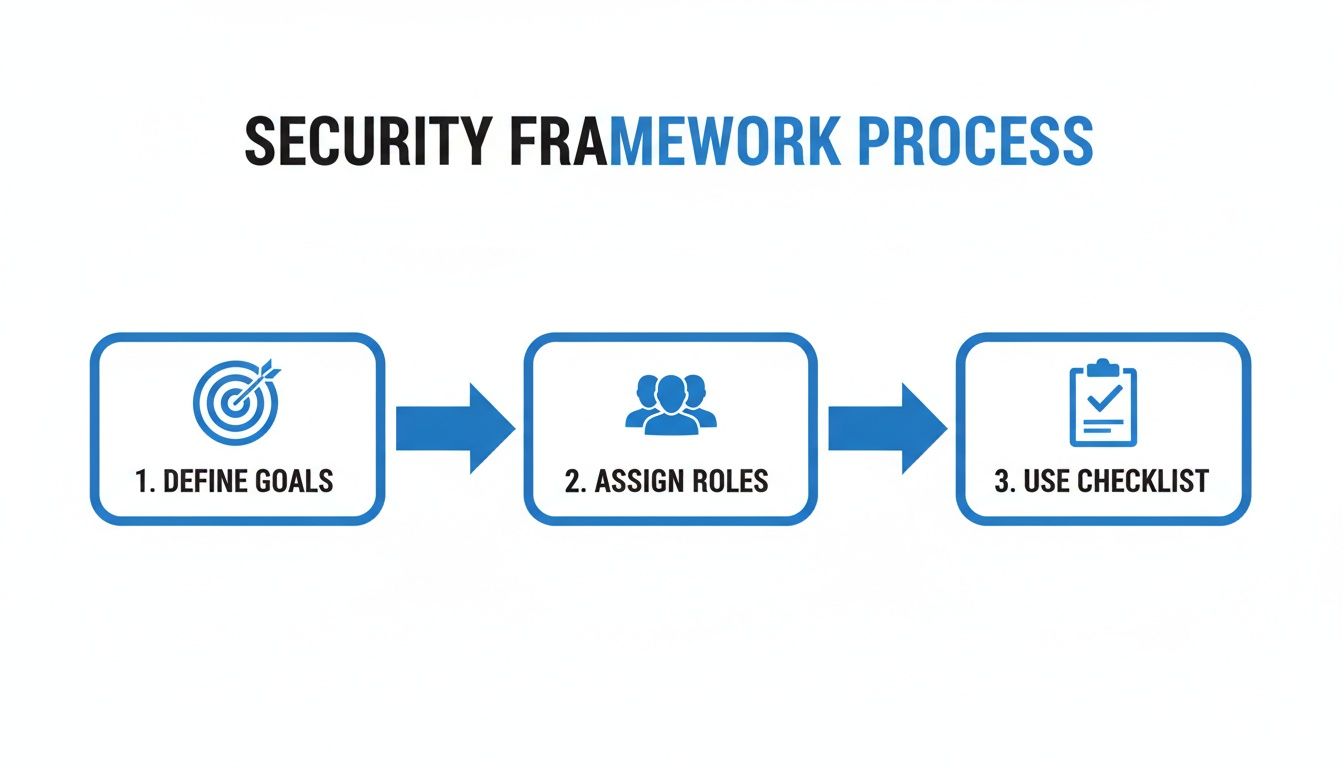

This simple flow shows how a solid security framework, supported by automation, should work.

As the visual suggests, successful automation depends on a clear process with defined goals and roles. This lets your tools effectively enforce the standards you've already set.

Tuning Your Tools for Maximum Impact

Just flipping the switch on a new tool isn't enough. You have to fine-tune it to your organization's reality. Out of the box, many SAST tools are notoriously noisy, flooding your team with false positives that lead to serious alert fatigue. If developers are constantly chasing ghosts, they’ll just start ignoring the tool’s output entirely.

The art of effective automation is all about customization. This means you need to:

- Suppress Irrelevant Findings: Actively triage and mark false positives so they don't keep showing up in every scan.

- Adjust Rule Severity: Tweak the severity levels of certain rules to match your company's actual risk appetite. A "medium" risk for one business might be "critical" for another.

- Create Custom Rules: Write your own rules to enforce company-specific coding standards or to check for vulnerabilities that are unique to your tech stack.
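Here's one way the first two tuning steps might look if you post-process scanner output yourself. Treat it as a sketch under assumptions: the config shape, rule names, and file paths are invented for the example, and most commercial scanners offer their own suppression and severity mechanisms that you'd normally use instead. Custom rules are tool-specific, so they're left out here.

```javascript
// Hypothetical tuning config; rule names and paths are illustrative only.
const tuningConfig = {
  suppressions: [
    { rule: 'hardcoded-secret', file: 'test/fixtures/fake-keys.js', reason: 'test fixture, not a real key' },
  ],
  severityOverrides: { 'missing-rate-limit': 'critical' },
};

// Drops triaged false positives, then applies the organization's severity overrides.
function applyTuning(findings, config) {
  return findings
    .filter((f) => !config.suppressions.some((s) => s.rule === f.rule && s.file === f.file))
    .map((f) => ({ ...f, severity: config.severityOverrides[f.rule] ?? f.severity }));
}

const rawFindings = [
  { rule: 'hardcoded-secret', file: 'test/fixtures/fake-keys.js', severity: 'high' },
  { rule: 'hardcoded-secret', file: 'src/config.js', severity: 'high' },
  { rule: 'missing-rate-limit', file: 'src/login.js', severity: 'medium' },
];

const tunedFindings = applyTuning(rawFindings, tuningConfig);
console.log(tunedFindings);
```

Requiring a `reason` on every suppression keeps the triage decision reviewable later, which matters when an auditor asks why a finding was silenced.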

Hybrid solutions that blend human expertise with smart automation are quickly becoming the industry standard. This shift is driven by a persistent cybersecurity workforce gap—some estimates point to a global shortage of around 3.4 million professionals—which makes purely manual reviews impossible to scale. At the same time, frameworks like PCI DSS v4.0 are pushing for continuous compliance, forcing organizations to adopt automated solutions just to keep up.

When you invest time in tuning, you turn a generic scanner into a highly effective, low-noise security partner that your developers can actually trust. For a deeper look at making this happen, check out our guide on automating code review the right way. A well-tuned approach ensures that when an automated gate does block a pull request, everyone knows it's for a good reason that needs immediate attention.

Mastering the Manual Security Code Review

Automated tools are fantastic for catching the low-hanging fruit. They’ll flag known vulnerability patterns at scale and act as your first line of defense, but they have one massive blind spot: they don’t understand intent. This is where a skilled human reviewer becomes irreplaceable.

A person can spot the subtle, context-specific flaws that even the smartest scanner will sail right past. We’re talking about the kind of bugs that pop up when what the code does doesn't match what it was supposed to do. Think about a tricky authorization check or a multi-step financial transaction. Automated tools just see syntactically correct code, but a manual review uncovers the clever bugs buried in flawed business logic.

To pull this off, you absolutely have to learn to think like an attacker. Every line of code is an opportunity. You have to poke at assumptions, question edge cases, and constantly ask yourself, "How could I break this?"

Thinking Like An Attacker: A Checklist

A great manual review isn't just a random scroll through a file; it's a systematic hunt for weaknesses. Your goal is to focus on the areas where automated tools are the least effective, ensuring you spend your limited time on the highest-risk parts of the application.

A manual review isn't just about finding bugs; it's about validating the security assumptions made during development. You're the human element that connects code to real-world risk.

Here’s where I recommend focusing your efforts:

- Authentication and Session Management: How are credentials really being handled? Look for sessions that aren't properly invalidated after a logout or password change. Dig into password recovery flows and check for tokens that never expire.

- Authorization and Access Control: This is a huge one. Scrutinize every single check that decides if user A can access resource B. Can a regular user hit an admin endpoint just by tweaking a URL? Is there a risk of an Insecure Direct Object Reference (IDOR)?

- Business Logic Flaws: These are my favorite finds. Can a user add a negative quantity of an item to their cart to get a refund? Or maybe complete a workflow out of order to bypass the payment step? Scanners are blind to this stuff.

- Complex Injection Vulnerabilities: While SAST is pretty good at spotting basic SQL injection, you need a human eye for second-order or blind injection flaws. These happen when user input gets stored and then used later in an unsafe way—a path that’s often too complex for a tool to trace.

- Cryptography and Secrets Management: Are you using outdated, weak algorithms? Even worse, are secrets like API keys or encryption keys hardcoded right in the source, even if they're lightly obfuscated?

This targeted approach ensures your manual review complements your automated tools instead of just re-doing their work. It’s all about adding maximum value.
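To illustrate the negative-quantity case from the checklist, here's a small sketch of the server-side validation a reviewer would want to see. The catalog, SKU, and `MAX_QUANTITY` limit are hypothetical. The important design choices are that the price is looked up on the server rather than trusted from the request, and that the quantity is checked before any arithmetic happens.

```javascript
const MAX_QUANTITY = 100; // assumed business limit, purely illustrative

// Prices the line item from a server-side catalog; never from client input.
function priceCartLine(catalog, productId, quantity) {
  const product = catalog.get(productId);
  if (!product) {
    return { ok: false, error: 'unknown product' };
  }
  if (!Number.isInteger(quantity) || quantity < 1 || quantity > MAX_QUANTITY) {
    return { ok: false, error: `quantity must be a whole number between 1 and ${MAX_QUANTITY}` };
  }
  return { ok: true, totalCents: quantity * product.unitPriceCents };
}

const catalog = new Map([['sku-1', { unitPriceCents: 1500 }]]);

console.log(priceCartLine(catalog, 'sku-1', 3));   // accepted
console.log(priceCartLine(catalog, 'sku-1', -2));  // rejected: would otherwise produce a negative total
console.log(priceCartLine(catalog, 'sku-1', 1.5)); // rejected: not a whole number
```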

From Vulnerable Code To Secure Fix

Spotting a vulnerability is only half the job. You also need to know how to fix it properly. Let’s walk through a super common authorization bypass vulnerability in a Node.js/Express app.

Here, an attacker could try to view an order that doesn't belong to them just by guessing the id in the URL.

Vulnerable Code Snippet:

```javascript
// An endpoint to fetch order details
app.get('/api/orders/:id', (req, res) => {
  const orderId = req.params.id;

  // This code only checks if the order exists...
  // ...but it never checks if the current user OWNS the order.
  const order = db.orders.findById(orderId);

  if (order) {
    res.json(order);
  } else {
    res.status(404).send('Order not found');
  }
});
```

The mistake is glaring once you look for it: the code validates that the order exists but never checks if the logged-in user actually owns it. This is a classic, and dangerous, access control failure.

Secure Code Fix:

```javascript
// The corrected, secure endpoint
app.get('/api/orders/:id', (req, res) => {
  const orderId = req.params.id;
  const userId = req.session.userId; // Assuming user ID is in the session

  const order = db.orders.findById(orderId);

  // Only return the order if it exists AND belongs to the current user.
  if (order && order.ownerId === userId) {
    res.json(order);
  } else {
    // Return a generic error to avoid leaking information
    res.status(404).send('Order not found');
  }
});
```

The fix is simple but critical. We added an authorization check to make sure the resource owner matches the person making the request. This is exactly the kind of contextual logic that manual reviews are perfect for finding.
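Once a fix like this lands, it's worth pinning it down with a regression test so the ownership check can't quietly disappear in a refactor. The sketch below doesn't use Express at all: it extracts the handler logic into a plain function and drives it with a hand-rolled fake response object. The `makeOrderHandler` and `fakeResponse` helpers and the in-memory `Map` are stand-ins invented for this example.

```javascript
// Handler logic mirroring the secure fix, with the data store injected for testing.
function makeOrderHandler(orders) {
  return (req, res) => {
    const order = orders.get(req.params.id);
    if (order && order.ownerId === req.session.userId) {
      res.json(order);
    } else {
      res.status(404).send('Order not found');
    }
  };
}

// Minimal stand-in for the parts of a response object the handler touches.
function fakeResponse() {
  const res = { statusCode: 200, body: undefined };
  res.status = (code) => { res.statusCode = code; return res; };
  res.json = (payload) => { res.body = payload; return res; };
  res.send = (payload) => { res.body = payload; return res; };
  return res;
}

const orderStore = new Map([['42', { id: '42', ownerId: 'alice', total: 1999 }]]);
const orderHandler = makeOrderHandler(orderStore);

// The owner can read the order.
const ownerRes = fakeResponse();
orderHandler({ params: { id: '42' }, session: { userId: 'alice' } }, ownerRes);

// Another logged-in user guessing the id gets the same 404 as a missing order.
const attackerRes = fakeResponse();
orderHandler({ params: { id: '42' }, session: { userId: 'mallory' } }, attackerRes);

console.log(ownerRes.statusCode, attackerRes.statusCode); // prints: 200 404
```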

Remember, how you deliver this feedback matters. Don't just point out the problem; explain the "why" behind it. Frame it as a teaching moment. Instead of saying, "This is insecure," try something like, "This pattern could lead to an IDOR vulnerability. Let's add a check here to ensure the user owns this resource." This collaborative approach builds a stronger security culture and makes it less likely you'll see the same mistake again.

Measuring Success and Overcoming Common Pitfalls

So you’ve rolled out a security code review process. That's a huge win, but the work isn't over. How do you actually know if it’s making a difference? Without a way to measure success, you’re just guessing. To prove the value of your efforts, you need to move beyond gut feelings and into hard data that shows a real impact on your company's risk.

After all, success isn't just about the number of bugs you squash. A truly effective program reduces risk over the long haul, helps developers think more securely on their own, and ultimately makes your entire codebase more resilient.

Key Performance Indicators That Matter

If you want to track how effective your code reviews are, you need to focus on the right Key Performance Indicators (KPIs). These numbers give you an honest, objective look at how the program is doing and help you make the case for continued investment.

Here are the essential metrics I always recommend keeping an eye on:

- Mean Time to Remediate (MTTR): How long, on average, does it take your team to fix a security flaw once it's been found? A dropping MTTR is a great sign that your process for identifying, triaging, and fixing vulnerabilities is getting smoother and more efficient.

- Vulnerability Recurrence Rate: Are you seeing the same old vulnerabilities—like XSS or IDOR—crop up in new code time and time again? A high recurrence rate is a major red flag. It usually means developers need better, more targeted training on specific security concepts.

- Vulnerability Density: This is a simple but powerful metric: the number of vulnerabilities found per thousand lines of code. As your security program matures, you should see this number consistently go down, proving that developers are writing safer code from the start.

- Critical Vulnerabilities in Production: This one is the ultimate gut check. The whole point of code review is to stop serious flaws from ever reaching your users. Tracking the number of critical or high-severity vulnerabilities discovered after a release is a direct measure of your program's real-world impact.
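If your findings live in a tracker you can export from, the first three metrics are straightforward to compute. Here's a sketch assuming each finding has a `category`, a `foundAt` timestamp, and an optional `fixedAt` timestamp; those field names are hypothetical. Note that "recurrence rate" has no single standard definition. This example uses the share of findings whose category had already appeared earlier in the list, which is just one reasonable choice.

```javascript
const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Average days between discovery and fix, over findings that have been fixed.
function meanTimeToRemediateDays(findings) {
  const fixed = findings.filter((f) => f.fixedAt);
  if (fixed.length === 0) return null;
  const totalMs = fixed.reduce((sum, f) => sum + (Date.parse(f.fixedAt) - Date.parse(f.foundAt)), 0);
  return totalMs / fixed.length / MS_PER_DAY;
}

// Findings per thousand lines of code.
function vulnerabilityDensity(findingCount, linesOfCode) {
  return findingCount / (linesOfCode / 1000);
}

// Share of findings whose category was already seen earlier (assumed definition).
function recurrenceRate(findings) {
  const seen = new Set();
  let repeats = 0;
  for (const f of findings) {
    if (seen.has(f.category)) repeats += 1;
    seen.add(f.category);
  }
  return findings.length === 0 ? 0 : repeats / findings.length;
}

const sampleFindings = [
  { category: 'xss', foundAt: '2024-03-01T00:00:00Z', fixedAt: '2024-03-05T00:00:00Z' },
  { category: 'idor', foundAt: '2024-03-10T00:00:00Z', fixedAt: '2024-03-12T00:00:00Z' },
  { category: 'xss', foundAt: '2024-03-15T00:00:00Z', fixedAt: null },
  { category: 'sqli', foundAt: '2024-03-20T00:00:00Z', fixedAt: null },
];

console.log(meanTimeToRemediateDays(sampleFindings));             // 3
console.log(vulnerabilityDensity(sampleFindings.length, 16000));  // 0.25
console.log(recurrenceRate(sampleFindings));                      // 0.25
```

Whatever definitions you settle on, keep them fixed over time; the trend matters more than the absolute number.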

The goal of a security code review program isn't just to find vulnerabilities; it's to stop creating them in the first place. The best metrics show a cultural shift toward proactive security, not just reactive bug-squashing.

Navigating Common Roadblocks

Even the most well-thought-out program can stumble if you don't plan for the human element. Security code reviews live at the messy intersection of people and technology, and that can easily create friction.

Preventing Reviewer Burnout

One of the quickest ways I've seen a review program die is by burning out the experts. When you have a tiny handful of security champions or senior engineers reviewing every single piece of security-sensitive code, they become an instant bottleneck and get completely overwhelmed.

The Fix: Set up a tiered review system. Empower all your developers to handle basic peer reviews for low-risk changes. Use your trained security champions for the more complex features, and save your dedicated security engineers for the really high-stakes stuff, like authentication services or payment processing gateways. This approach spreads the workload and actually lets you scale.
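A tiered system works best when the routing is automatic rather than left to judgment on each pull request. The sketch below picks a reviewer tier from the list of changed file paths. The path patterns and tier names are assumptions made up for the example; you'd replace them with whatever maps to the high-risk areas of your own codebase.

```javascript
// Illustrative path patterns; adjust to your repository layout.
const HIGH_RISK_PATTERNS = [/(^|\/)auth\//, /(^|\/)payments?\//];
const SENSITIVE_PATTERNS = [/(^|\/)api\//, /(^|\/)models\//];

function matchesAny(file, patterns) {
  return patterns.some((pattern) => pattern.test(file));
}

// Returns the most senior tier required by any file in the change.
function reviewTier(changedFiles) {
  if (changedFiles.some((f) => matchesAny(f, HIGH_RISK_PATTERNS))) return 'security-engineer';
  if (changedFiles.some((f) => matchesAny(f, SENSITIVE_PATTERNS))) return 'security-champion';
  return 'peer';
}

console.log(reviewTier(['src/auth/session.js', 'README.md'])); // security-engineer
console.log(reviewTier(['src/api/orders.js']));                // security-champion
console.log(reviewTier(['docs/README.md', 'src/ui/button.css'])); // peer
```

Because the most sensitive file in a change decides the tier, a pull request can't dodge scrutiny by burying an authentication change among cosmetic edits.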

Easing Friction Between Developers and Security

Let’s be honest: developers want to ship cool features, and security wants to prevent breaches. Sometimes, those goals can feel like they’re in direct opposition, creating an "us vs. them" vibe. That tension slows everything down and kills the collaborative spirit you need for security to actually work.

The Fix: Frame security feedback as a chance to mentor, not to criticize. Give clear, actionable advice on how to fix a problem and, more importantly, explain the "why" behind it. Integrating AI-assisted tools like kluster.ai right into the IDE also makes a massive difference here. It provides instant, private feedback, letting developers fix issues before a pull request is ever opened, avoiding public call-outs.

Taming the Flood of False Positives

Automated SAST tools are fantastic for covering a lot of ground quickly, but they can be incredibly noisy. When developers get bombarded with a constant stream of false positives, they start ignoring all the alerts—including the real ones. This "alert fatigue" can render your automation almost useless.

The Fix: You have to invest time in tuning your tools. Get in there and actively triage alerts, suppressing the ones you know are false positives. Customize the rules to fit your specific tech stack and risk tolerance. When you cut down the noise, you ensure that when an automated tool does flag an issue, your team takes it seriously every single time.

Frequently Asked Questions About Security Code Reviews

Even with a solid plan, a few common questions always pop up when teams get serious about security code reviews. Getting the answers right is the difference between a program that developers actually use and one that just collects dust.

Let's cut through the noise and tackle some of the most common hurdles with straightforward, practical advice.

How Do We Get Developer Buy In For Security Code Reviews?

This is it. This is the single most important part of the entire process. If developers see security reviews as just another bureaucratic gate slowing them down, they’ll fight you every step of the way. You have to reframe it.

This isn't about the security team throwing a checklist over the wall. It's about a shared responsibility for building high-quality, resilient software.

One of the best ways to make this happen is to start a security champions program. Find the developers who are already passionate about security, give them some extra training, and empower them to be the go-to security advisors on their teams. They become the bridge between security and engineering, building trust and a culture of collaboration from the inside out.

And please, give them tools that make their lives easier, not harder. An AI-assisted reviewer that flags issues right inside their IDE gives them instant, private feedback. They can learn and fix things long before a pull request is ever opened. That’s how you turn security from a roadblock into a helpful guide.

What Is The Difference Between SAST, DAST, and SCA?

You'll hear these acronyms thrown around a lot, but they aren't interchangeable. They're distinct, complementary layers of automated security testing. A mature program uses all three to cover different blind spots.

Here's the simple way to think about it:

- SAST (Static Application Security Testing): This is your "white-box" tester. It scans your source code from the inside out before the application is even running. It’s looking for known vulnerability patterns, like SQL injection or buffer overflows, right in the code itself.

- DAST (Dynamic Application Security Testing): This is your "black-box" tester. It attacks your live, running application from the outside, just like a real hacker would. It has no idea what the code looks like; it's just probing for weaknesses.

- SCA (Software Composition Analysis): Think of this as the librarian for your open-source dependencies. It scans all the third-party libraries and components you're using and checks them against a massive database of known vulnerabilities (CVEs). It makes sure you aren't accidentally inheriting someone else's security problems.

The real magic is how they work together. SAST finds flaws in the code you wrote, SCA secures your supply chain, and DAST validates that everything is secure once it's all running in a real environment.

How Much Time Should A Security Code Review Take?

There is no magic number. Anyone who gives you one is selling something.

The time a proper security review takes depends entirely on the change's complexity and potential risk. A one-line bug fix in a non-critical UI component might only need 15 minutes of scrutiny. On the other hand, a brand-new authentication service could demand several hours of deep, focused review from multiple experts.

The only sane approach is a risk-based one. Prioritize your review time based on the potential impact of a flaw.

Changes to things like authentication, authorization, payment processing, or any code touching sensitive PII should always get the most attention. This ensures your limited time is spent where it will have the biggest security impact, which prevents reviewer burnout and keeps the development pipeline moving.

The growing importance of this field is clear from market trends. The combined market for secure code review platforms and services is already valued in the billions, with analysts projecting a compound annual growth rate of around 15% for the coming years. This growth reflects a major shift, as systematic security code reviews move from a niche practice to a core enterprise priority, driven by automation, regulatory pressure, and the need for scale. Discover more insights about the secure code review market growth on datainsightsmarket.com.
