The Ultimate Guide to Automated Code Reviews

Automated code reviews are exactly what they sound like: using software to scan source code for bugs, security holes, and style issues, all without a human having to lift a finger. Think of it as an instant quality check that runs before a human reviewer even lays eyes on the code. It's the first line of defense, catching all the common, easy-to-spot problems automatically so your team can focus on what really matters.

Why Manual Code Reviews Are Reaching a Breaking Point

Imagine a high-speed assembly line cranking out products faster than ever before. Now, picture one person at the very end, trying to manually inspect every single item. It’s an impossible task, right? That’s the reality for a lot of software teams today. The manual code review process has become a massive bottleneck.

The rise of AI coding assistants has put code generation on steroids, but our human ability to review all that new code hasn't changed. We're still just people. This growing gap is creating a cascade of problems that slows everyone down and quietly introduces risk.

The Soaring Volume of AI-Generated Code

The root of the problem is just simple math. By 2025, an estimated 84% of developers will be using AI tools, which are already cranking out a staggering 41% of all new code. Let that sink in. For a team of 250 developers, that could mean nearly 65,000 pull requests a year, eating up over 21,000 hours of review time.
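To make those numbers concrete, here is a quick back-of-the-envelope breakdown. It uses only the figures quoted above; the per-developer and per-PR values are simple divisions, not additional data.

```python
# Figures quoted in the paragraph above.
developers = 250
pull_requests_per_year = 65_000
review_hours_per_year = 21_000

# Derived values: plain division, no extra assumptions.
prs_per_developer = pull_requests_per_year / developers
minutes_per_review = review_hours_per_year * 60 / pull_requests_per_year
review_hours_per_developer = review_hours_per_year / developers

print(f"{prs_per_developer:.0f} PRs per developer per year")               # 260
print(f"{minutes_per_review:.1f} review minutes per PR")                    # 19.4
print(f"{review_hours_per_developer:.0f} review hours per developer per year")  # 84
```

In other words, each pull request gets roughly twenty minutes of review on average, and every developer spends about two working weeks a year reviewing.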

The data shows a reviewer's focus craters after just 80-100 lines of code, and it can take up to 14 different people to confidently catch a security flaw in a manual review. This explosion in code output is causing some serious pain.

- Endless Pull Request Queues: PRs just stack up, sometimes waiting days for a review. This blocks feature releases and creates a nightmare of merge conflicts down the line.

- Reviewer Burnout and Fatigue: Your most senior developers, the ones who should be thinking about architecture, are stuck checking for syntax errors and style guide violations. It's a huge waste of their expertise.

- Inconsistent Quality Standards: When people are rushed, quality becomes a lottery. One reviewer might spot a critical bug that another misses completely, leading to a messy and unpredictable codebase.

- Constant Context Switching: Developers get pulled out of deep, focused work to review someone else's code, completely derailing their own productivity.

Manual code review was not designed for the age of AI-assisted development. It’s a system straining under a load it was never meant to carry, turning a quality gate into a productivity dam.

The Inevitable Breaking Point

When your manual processes are eating up this much time and brainpower, you eventually hit a wall. And this isn’t just an engineering headache; it’s a business problem. Slower releases, more bugs in production, and frustrated, burnt-out developers all hit the bottom line.

Across all kinds of industries, smart organizations are figuring this out by using automation to reduce repetitive staff tasks and letting their experts focus on the hard problems.

Automated code reviews aren't a "nice-to-have" anymore. They're a necessary adaptation to keep up with the pace of modern development, making sure that moving fast doesn't mean sacrificing stability or security.

Understanding Different Automated Code Review Tools

Automated code reviews aren't a single technology you just switch on. It's more like building a specialized team of inspectors for your codebase, where each member brings a unique skill set to the table. Getting this right means understanding their distinct roles so you can build a quality and security process that actually works for your team.

You've got the foundational layer with traditional static analysis, which acts as a basic rule-checker. Then you have AI-powered tools that bring deeper, more contextual insights. And finally, you have real-time feedback that pushes the entire process directly into the developer's editor, catching issues the moment they're typed. Each layer builds on the last, creating a rock-solid safety net for your code.

Traditional Static Analysis: The Rule Follower

Static analysis tools, which range from everyday linters to full Static Application Security Testing (SAST) scanners, are the diligent rule-followers on your inspection team. Think of them as a grammar checker for your code. They meticulously scan every line to find violations against a predefined set of rules.

These tools are fantastic at enforcing consistency and catching known anti-patterns.

- Code Style Enforcement: They make sure everyone follows the same formatting, naming conventions, and syntax rules. No more pointless debates in pull requests over tabs versus spaces.

- Known Vulnerability Detection: They can spot common security flaws, like using a deprecated crypto function or leaving the door open to basic injection attacks.

- Bug Pattern Recognition: They identify patterns that often lead to bugs down the road, such as potential null pointer exceptions or accidental infinite loops.

But while they're great for maintaining a clean and predictable codebase, their logic is rigid. A linter has no idea what the intent behind the code is; it only knows if a rule was broken. This is where they fall short—they can't catch complex logical errors or new bugs that don't fit a known pattern.
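To see what "rule-following" looks like in practice, here is a minimal sketch of a two-rule checker built on Python's standard `ast` module. The two rules and the sample snippet are chosen purely for illustration; real linters ship hundreds of rules and far more careful analysis.

```python
import ast

# Sample code to inspect; it contains both patterns on purpose.
SOURCE = '''
def add_item(item, bucket=[]):
    try:
        bucket.append(item)
    except:
        pass
    return bucket
'''

def lint(source: str) -> list[tuple[int, str]]:
    """Return (line, message) pairs for two well-known bug patterns."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        # Rule 1: a mutable default argument is shared between calls.
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            for default in node.args.defaults + node.args.kw_defaults:
                if isinstance(default, (ast.List, ast.Dict, ast.Set)):
                    findings.append((default.lineno, "mutable default argument"))
        # Rule 2: a bare `except:` also catches KeyboardInterrupt and SystemExit.
        if isinstance(node, ast.ExceptHandler) and node.type is None:
            findings.append((node.lineno, "bare except"))
    return sorted(findings)

for line, message in lint(SOURCE):
    print(f"line {line}: {message}")
```

Notice what the checker does not do: it has no idea whether `add_item` is supposed to share its bucket across calls. It only knows a rule was broken, which is exactly the limitation described above.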

AI-Powered Reviews: The Seasoned Expert

If static analysis is the grammar checker, think of AI-powered review as the experienced editor who reads your entire story to find plot holes and logical disconnects. These advanced tools go way beyond simple rules to understand the context and purpose of the code.

By analyzing the entire codebase, developer comments, and even the original prompt given to an AI assistant, these systems provide much deeper feedback. They can spot complex logical flaws, suggest performance optimizations, and even flag subtle security risks a rule-based system would completely miss. To really get a handle on this, it's worth exploring the principles of AI automation that give these tools their reasoning power.

A 2025 report drives this point home: 53% of developers using AI tools want better contextual understanding from their review systems, a gap that traditional static analysis just can't fill.

This ability to grasp a developer's intent is what truly sets AI reviews apart. They stop being simple checkers and start acting like genuine collaborators in the development process. For a closer look at the different platforms, check out our guide on the top automated code review tools that use these AI capabilities.

Real-Time In-IDE Feedback: The Instant Coach

The final piece of the puzzle is all about timing. It's about delivering feedback at the perfect moment—right inside the Integrated Development Environment (IDE) while the developer is actively writing code. This "shift-left" approach is like having a coach looking over your shoulder, offering instant guidance.

Instead of waiting for a CI/CD pipeline to fail or for a pull request review to finally kick off, developers get feedback in seconds. This real-time loop is incredibly powerful. It stops errors from ever being committed, gets rid of the constant context switching, and speeds up learning by connecting a mistake directly to its source. Platforms like kluster.ai are built for this, specializing in instant, in-IDE verification that makes sure code is solid before it ever leaves the editor.

To help you decide what's right for your team, let's break down how these three approaches stack up against each other. Each has its place, and the best solution often involves a mix of all three.

Comparing Automated Code Review Approaches

| Review Type | Primary Function | Best For | Limitations |
|---|---|---|---|
| Static Analysis | Enforces predefined rules, styles, and known patterns. | Maintaining code consistency, catching common bugs, and enforcing style guides across a team. | Lacks context, cannot detect logical flaws or novel vulnerabilities, can be "noisy" with false positives. |
| AI-Powered Review | Understands code context, developer intent, and complex logic. | Finding deep logical errors, security vulnerabilities, and performance issues that rules miss. | Can be more resource-intensive, quality varies greatly between tools, often operates post-commit. |
| Real-Time In-IDE | Provides instant feedback on code as it is being written. | Preventing errors from being committed, accelerating developer learning, and reducing rework cycles. | Relies on tight IDE integration, effectiveness depends on the speed and accuracy of the underlying analysis engine. |

Ultimately, the goal isn't just to find bugs faster but to build a workflow where fewer bugs are created in the first place. By combining the baseline consistency of static analysis, the deep insights of AI, and the immediate feedback of in-IDE tools, you create a development environment where quality is built-in, not bolted on.

Shifting Left with In-IDE vs CI Pipeline Reviews

Deciding where automated code reviews happen is just as important as deciding what they check. The timing of the feedback can completely change its impact, cost, and effectiveness. This brings us to a huge concept in modern software development: shifting left. It’s all about moving quality checks earlier in the process, and it’s the core difference between the two main ways to automate reviews.

On one hand, you have reviews that happen late in the game, during the Continuous Integration (CI) pipeline. On the other, you have reviews that happen instantly, right inside the developer's Integrated Development Environment (IDE).

To get the difference, think about writing a research paper. A CI pipeline review is like handing your finished draft to an editor. They'll find typos, grammar mistakes, and logical gaps. That's a crucial quality check, but it happens after you’ve already done all the work. Now you have to go back, reopen the document, find the issues, fix them, and resubmit. It's a slow, painful loop.

An in-IDE review, however, is like using a real-time grammar and spell checker while you type. It flags a typo the second you make it. It suggests a better word on the spot. You fix it instantly, without breaking your flow, and the mistake never even makes it into the first draft. That immediate feedback is the whole point of shifting left.

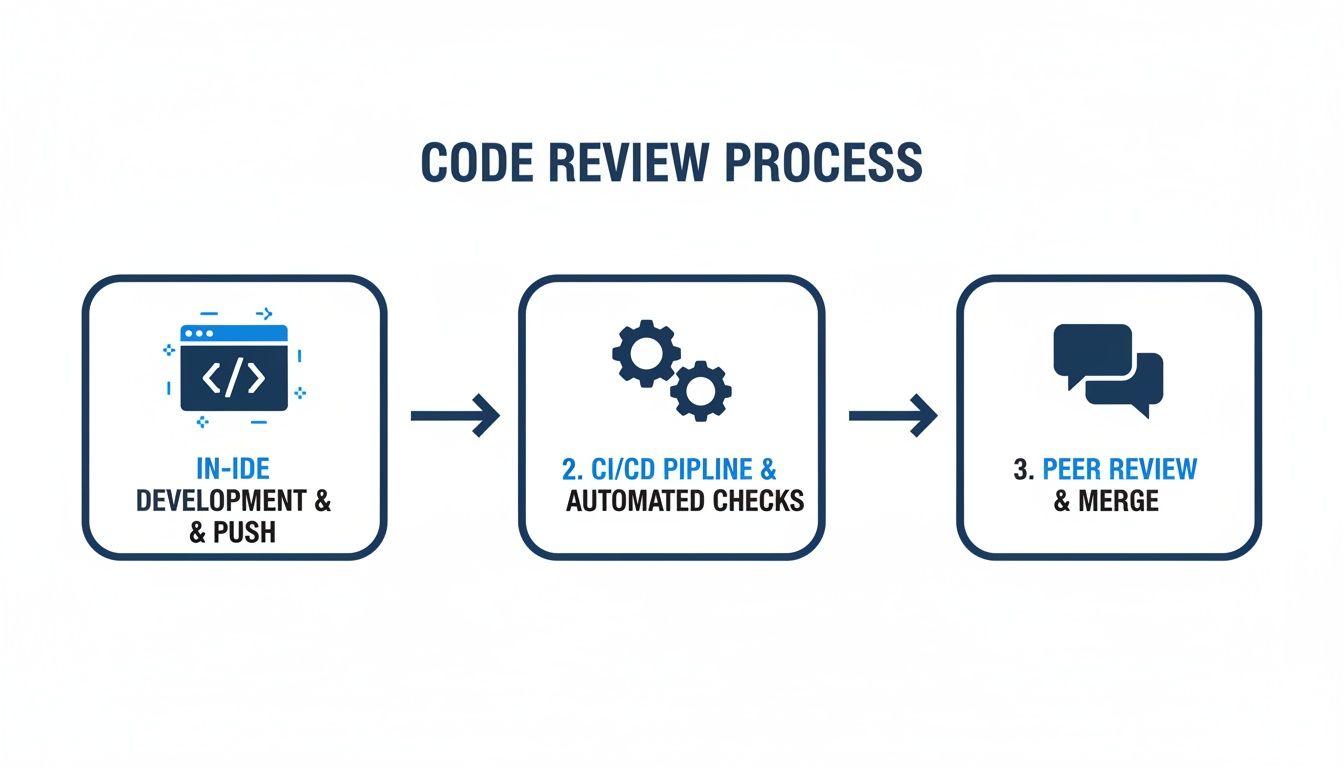

The diagram above shows this flow, with in-IDE checks happening long before the more traditional CI/CD pipeline reviews kick in.

As you can see, in-IDE feedback is part of the creative process, while CI pipeline checks act as a gatekeeper after the work is supposedly "done."

The Power of Instant Feedback in the IDE

Getting feedback directly in the IDE is a game-changer for developer productivity. When a review happens later in a CI pipeline, the developer has probably already moved on to something else. To fix the problem, they have to stop what they're doing, reload the old mental context, find the code, fix it, and push it all over again.

This context switching is a notorious productivity killer. It shatters focus and throws sand in the gears of the workflow. In-IDE tools get rid of this problem completely.

The core benefit of in-IDE automated code reviews is the elimination of the feedback delay. By catching issues in seconds, not hours, it prevents context switching, reduces rework, and transforms the review process from a roadblock into a real-time learning experience.

Because of its timing, real-time feedback offers benefits that CI pipeline checks simply can't:

- Reduced PR Churn: Issues get fixed before a pull request is even created. This stops the frustrating back-and-forth of "PR ping-pong," where a request is rejected and resubmitted over and over for small mistakes.

- Accelerated Learning: Developers see the consequences of their code immediately. This tight feedback loop reinforces best practices and helps engineers, especially junior ones, learn the codebase and company standards much faster.

- Lower Remediation Costs: A bug found and fixed in the editor costs pennies. That same bug, if it makes it through the CI/CD pipeline and into production, can cost thousands to identify, patch, and redeploy.

CI Pipeline Reviews Still Have a Crucial Role

This doesn't mean CI pipeline reviews are obsolete—far from it. They serve as an essential final checkpoint. While in-IDE tools are perfect for immediate, line-by-line feedback, CI-based tools are better for slower, more comprehensive analyses that might be too resource-heavy to run in real time.

Think of it as a layered defense strategy:

- In-IDE Reviews (The First Line): Catch the vast majority of errors, style issues, and simple bugs as they are written. This is where tools like kluster.ai provide immense value by verifying AI-generated code instantly.

- CI Pipeline Reviews (The Final Gate): Run more extensive checks, like integration tests or deep security scans, that ensure the entire build is stable before it moves toward production.

By combining both, you get a much more robust quality process. The in-IDE tools handle the high-volume, immediate feedback, freeing up the CI pipeline to focus on its role as the ultimate gatekeeper. To go deeper, learn more about how AI-powered IDEs are making this real-time coaching model a reality for development teams. This proactive approach ensures cleaner code enters the pipeline, making the entire development lifecycle faster, cheaper, and more efficient.
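One way to picture the layered split is as a simple time budget: anything fast enough to feel instant runs in the editor, and everything else waits for the pipeline. The sketch below is illustrative only. The check names, their runtimes, and the five-second budget are all assumptions; substitute your own.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Check:
    name: str
    typical_seconds: float  # rough runtime, assumed for illustration

# Hypothetical checks and timings.
CHECKS = [
    Check("formatting", 0.5),
    Check("lint rules", 2.0),
    Check("secret scan on changed lines", 1.5),
    Check("integration tests", 600.0),
    Check("deep security scan", 900.0),
]

IDE_BUDGET_SECONDS = 5.0  # assumed ceiling for feedback that still feels instant

def split_by_layer(checks, budget=IDE_BUDGET_SECONDS):
    """Fast checks go to the editor; everything else waits for CI."""
    ide = [c.name for c in checks if c.typical_seconds <= budget]
    ci = [c.name for c in checks if c.typical_seconds > budget]
    return ide, ci

ide_checks, ci_checks = split_by_layer(CHECKS)
print("In-IDE:", ide_checks)
print("CI pipeline:", ci_checks)
```

The point of the split is not that slow checks matter less. It's that each check runs at the stage where its feedback arrives soon enough to be useful.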

How to Implement Automated Code Reviews in Your Workflow

Getting started with automated code reviews isn't like flipping a switch. It's more of a thoughtful rollout. When you get it right, it feels like every developer just got a super-helpful assistant. But get it wrong, and it’s like installing an annoying gatekeeper that just slows everyone down.

A good implementation starts small and builds momentum. You want to introduce automation in a way that actually solves problems without creating new ones. The idea is to move from a small-scale experiment to an organization-wide standard, proving the value every step of the way.

Start with a Pilot Program

Don't even think about deploying a new tool to your entire engineering org at once. That's a recipe for chaos. Instead, pick a single pilot team to be your testbed. They’ll help you fine-tune the system and iron out all the kinks before you go big.

Look for a team that’s open to new tools and is struggling with problems automation can fix, like painfully long review cycles or inconsistent code quality. This pilot phase is your chance to get real-world feedback and see how the tool really fits into your workflow.

- Define Clear Goals: What are you actually trying to solve? Maybe you want to slash PR review times by 25%, kill all the pointless style arguments, or enforce a new security standard.

- Keep the Scope Small: Focus on just one or two key repositories. This makes it way easier to configure rules, measure the impact, and get specific feedback from the team.

- Collect Both Kinds of Data: Track the hard numbers—review time, bug rates—but also just talk to the developers. Is the tool actually helpful? Is the feedback clear and actionable, or is it just noise?

Integrate Seamlessly into Existing Workflows

If you want developers to actually use a new tool, it has to feel like a natural part of their day. Forcing them to jump over to a separate dashboard or learn some clunky new interface is a guaranteed way to make them hate it. The trick is to meet them where they already work.

That means deep integration with the tools they live in every day—their IDE, their Git provider, and the CI/CD pipeline—is non-negotiable. This is how you deliver feedback at the right time and in the right place without yanking them out of the zone.

The best automated reviews happen right inside the developer's editor. You get instant feedback that stops errors before a pull request even exists. This "shift-left" approach kills context switching and makes the feedback loop ridiculously short.

Modern tools are taking this even further. For instance, platforms like kluster.ai provide in-IDE, five-second feedback from intent-aware agents. They check your code against prompts, project history, and docs, catching errors, regressions, and security holes on the fly. By enforcing standards across editors like VS Code and Cursor, they help startups cut merge times in half and let big companies govern AI-generated code at scale. The research on developer experiences with AI tools makes it clear why this kind of immediate feedback is a game-changer.

Configure and Customize Your Ruleset

A one-size-fits-all ruleset is a fantasy. Every company has its own coding standards, architectural quirks, and security policies. A successful rollout hinges on tailoring the tool to reflect what your team actually considers best practice.

Start by turning off all the noisy, low-value rules. Nothing kills adoption faster than "alert fatigue" from a tool that just annoys people. Focus on the rules that tackle your biggest headaches first.

- Enforce Core Style Guides: Get the basics locked down first. Start with rules for formatting, naming conventions, and code structure to create a consistent baseline for everyone.

- Add Security and Performance Checks: Once the basics are in place, gradually layer in rules that scan for common security flaws (like the OWASP Top 10) and performance anti-patterns.

- Develop Custom Rules: As your team gets comfortable, you can start building custom rules that enforce your unique architectural decisions, making sure new code doesn't stray from established patterns.

By building up your ruleset step-by-step, you can raise your standards without overwhelming the team. Before you know it, automated reviews will be a powerful and trusted part of your workflow.
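As an example of what a custom architectural rule might look like, here is a minimal sketch that flags imports crossing a layer boundary. The `ui` and `db` package names and the `FORBIDDEN_IMPORTS` table are hypothetical, and a real tool would expose its own rule format rather than this hand-rolled check.

```python
import ast

# Hypothetical layering rule: code in the "ui" package must not import "db" directly.
FORBIDDEN_IMPORTS = {"ui": {"db"}}

def imported_packages(source: str) -> set[str]:
    """Collect the top-level package of every absolute import in the source."""
    packages = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            packages.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            packages.add(node.module.split(".")[0])
    return packages

def check_layering(package: str, source: str) -> list[str]:
    """Return one violation message per forbidden package imported."""
    forbidden = FORBIDDEN_IMPORTS.get(package, set())
    return [
        f"{package} must not import {name} directly"
        for name in sorted(imported_packages(source) & forbidden)
    ]

ui_module = "from db.models import User\nimport json\n"
print(check_layering("ui", ui_module))  # ['ui must not import db directly']
```

Rules like this are worth adding last, once the team already trusts the tool, because they encode decisions that only make sense inside your codebase.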

Measuring the ROI of Your Automated Review Process

Bringing in any new tool means you've got to justify it, and an automated code review platform is no exception. How do you actually prove it's making a difference? To measure the return on investment (ROI), you have to look past simple vanity metrics and dial in on tangible KPIs that connect directly to what the business cares about: speed, quality, and security.

Think of it like upgrading the engine in a race car. You wouldn't just say it "feels faster." You'd measure lap times, fuel efficiency, and how it holds up under pressure. The same logic applies here. You need a dashboard of core metrics to show exactly how automation is improving your entire development lifecycle.

Key Performance Indicators to Track

If you want to build a compelling case, you need to focus on the numbers that engineering leaders and stakeholders actually care about. These KPIs tell a clear story about how much time you're saving, how much risk you're cutting, and how much better your code is getting. A solid dashboard should put these five indicators front and center.

- Pull Request Cycle Time: This is the big one. It's the total time from the moment a pull request is opened until it's merged into the main branch. Long cycle times are a classic sign that your review process is a bottleneck. When this number drops, it's a clear signal that automated code reviews are handling the small stuff, freeing up your senior devs to give a quick thumbs-up.

- Code Churn Rate: This metric tracks how much code gets rewritten after the first review. A high churn rate usually means requirements were fuzzy or that reviewers are catching a ton of mistakes late in the game. Good in-IDE automation should crush this number by helping developers write better code from the start.

A core goal of automation isn't just to find flaws, but to prevent them from being committed in the first place. When Code Churn Rate drops, it's a strong signal that your "shift-left" strategy is working and developers are wasting less time on rework.

- Bugs Found in Production: This is the ultimate report card for code quality. The whole point of a review process is to stop defects from ever reaching your users. If you can show a steady downward trend in production bugs, you have powerful proof that your automated checks are catching critical issues before they do any damage.

- Developer Onboarding Time: How fast can a new hire start shipping meaningful, high-quality code? Automated tools that enforce your team's standards and give instant feedback act like a personal coach. They help new engineers get up to speed on your codebase and best practices in a fraction of the time. A shorter onboarding period is a direct and immediate return on investment.

- Security Vulnerabilities Detected Pre-Commit: For anyone serious about DevSecOps, this metric is gold. By tracking the number of potential security holes caught and fixed before the code even hits the repository, you can clearly demonstrate a massive reduction in your organization's risk profile.
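The first two KPIs are easy to compute once you have the raw numbers. Here is a minimal sketch using made-up sample data. The field names are hypothetical, and churn is assumed here to mean lines reworked after the first review divided by lines added; your team may define it differently.

```python
from datetime import datetime
from statistics import median

# Made-up sample data for illustration only.
pull_requests = [
    {"opened": "2025-03-03T09:00:00", "merged": "2025-03-03T15:00:00",
     "lines_added": 200, "lines_reworked": 20},
    {"opened": "2025-03-04T10:00:00", "merged": "2025-03-06T10:00:00",
     "lines_added": 500, "lines_reworked": 150},
    {"opened": "2025-03-05T08:00:00", "merged": "2025-03-05T20:00:00",
     "lines_added": 300, "lines_reworked": 30},
]

def cycle_time_hours(pr: dict) -> float:
    """Hours between a pull request being opened and merged."""
    opened = datetime.fromisoformat(pr["opened"])
    merged = datetime.fromisoformat(pr["merged"])
    return (merged - opened).total_seconds() / 3600

def median_cycle_time(prs) -> float:
    """Median is less distorted by one very slow PR than the mean."""
    return median(cycle_time_hours(pr) for pr in prs)

def churn_rate(prs) -> float:
    """Share of added lines that were reworked after the first review."""
    added = sum(pr["lines_added"] for pr in prs)
    reworked = sum(pr["lines_reworked"] for pr in prs)
    return reworked / added

print(f"Median cycle time: {median_cycle_time(pull_requests):.1f} h")  # 12.0 h
print(f"Churn rate: {churn_rate(pull_requests):.0%}")                   # 20%
```

Tracking the median rather than the average keeps a single PR that sat open over a holiday from skewing the trend.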

Creating Your ROI Dashboard

Putting together a simple dashboard to visualize these metrics is surprisingly straightforward. Most version control systems like GitHub or GitLab offer APIs that let you pull this data. You can feed it into your existing internal dashboards or BI tools to track these KPIs over time, showing a clear "before and after" picture.
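As a rough sketch of what pulling that data might look like, the snippet below reads closed pull requests from GitHub's REST API and turns them into cycle times. It fetches a single page only and skips pagination, rate limits, and error handling. The `owner`, `repo`, and `token` arguments are placeholders you would supply, and the `sample` list is made-up data shaped like the API response so the parsing step can be tried offline.

```python
import json
import urllib.request
from datetime import datetime

def fetch_closed_pulls(owner: str, repo: str, token: str) -> list[dict]:
    """Fetch one page of closed pull requests from the GitHub REST API."""
    url = (f"https://api.github.com/repos/{owner}/{repo}/pulls"
           "?state=closed&per_page=100")
    request = urllib.request.Request(url, headers={
        "Accept": "application/vnd.github+json",
        "Authorization": f"Bearer {token}",
    })
    with urllib.request.urlopen(request) as response:
        return json.load(response)

def parse_timestamp(value: str) -> datetime:
    """GitHub timestamps end in 'Z'; normalize for older Python versions."""
    return datetime.fromisoformat(value.replace("Z", "+00:00"))

def merged_cycle_hours(pulls: list[dict]) -> list[float]:
    """Cycle time in hours for each merged PR; unmerged PRs are skipped."""
    hours = []
    for pull in pulls:
        if pull.get("merged_at") is None:
            continue  # closed without being merged
        delta = parse_timestamp(pull["merged_at"]) - parse_timestamp(pull["created_at"])
        hours.append(delta.total_seconds() / 3600)
    return hours

# Offline sample shaped like the API response (made-up values).
sample = [
    {"created_at": "2025-03-03T09:00:00Z", "merged_at": "2025-03-03T13:30:00Z"},
    {"created_at": "2025-03-04T09:00:00Z", "merged_at": None},
]
print(merged_cycle_hours(sample))  # [4.5]
```

Run something like this on a schedule, store the results, and you have the "before and after" series your dashboard needs.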

By focusing on these outcomes, you change the conversation from "this tool is helpful" to "this tool saved us X hours in review time and prevented Y critical bugs from reaching production." That's the kind of data-driven argument that not only justifies the initial cost but also builds a rock-solid case for expanding automation across the entire engineering org.

Common Pitfalls of Automation and How to Avoid Them

Bringing automated code reviews into your workflow can be a game-changer, but it’s no silver bullet. Like any powerful tool, if you don't handle it right, you can create more problems than you solve. Nailing the rollout is the difference between an assistant that speeds everyone up and a noisy distraction that gets muted.

The goal is to weave automation into your team's rhythm so seamlessly that it feels like a natural extension of their workflow. If you see the common traps coming, you can sidestep them and build a system that actually helps people ship better code, faster.

The Problem of Alert Fatigue

The fastest way to get developers to ignore your shiny new tool is to drown them in notifications. This is "alert fatigue," and it happens when a tool is configured to be way too aggressive out of the box, flagging every minor style issue and trivial suggestion.

When every other line of code gets a warning, developers learn to tune it all out—the important stuff included. Pretty soon, even critical security alerts get lost in the noise.

To prevent this, you have to be deliberate with your ruleset:

- Start small. Turn on only the most critical rules first—things like major security vulnerabilities, serious performance anti-patterns, and the handful of style rules that cause the most arguments.

- Kill the nitpicks. Disable purely stylistic or opinion-based rules at the beginning. You can always add them back later, once the team sees the tool is genuinely useful.

- Introduce new rules slowly. Don't just flip a switch. Add new checks one by one and explain why they matter to the team before you enable them.
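The rollout described above can be sketched as a ruleset where every rule carries the phase in which it gets switched on. The rule names, severities, and phase numbers below are hypothetical; the idea is simply that critical checks come first and nitpicks come last.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:
    name: str
    severity: str      # "critical", "major", or "minor"
    enabled_from: int  # rollout phase in which the rule is switched on

# Hypothetical rules, ordered from must-have to nitpick.
RULES = [
    Rule("hardcoded-secret", "critical", 1),
    Rule("sql-injection", "critical", 1),
    Rule("n-plus-one-query", "major", 2),
    Rule("line-length", "minor", 3),
    Rule("import-order", "minor", 3),
]

def active_rules(phase: int) -> list[str]:
    """Names of all rules that are enabled at the given rollout phase."""
    return [rule.name for rule in RULES if rule.enabled_from <= phase]

for phase in (1, 2, 3):
    print(f"Phase {phase}: {active_rules(phase)}")
```

Keeping the phase next to each rule also gives you a natural place to record why the rule exists, which makes the "explain before you enable" step much easier.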

Overcoming Developer Resistance

Let's be honest: developers can be skeptical of new tools, especially ones that feel like they're judging their work. Some will see an automated reviewer as a critique of their skills, a gatekeeper slowing them down, or just more corporate process getting in the way.

The trick is to frame the tool as a helpful assistant, not a robotic enforcer. It’s there to handle the boring, repetitive checks so they can focus on the interesting problems. It helps them catch simple mistakes before anyone else sees them, cuts down on the back-and-forth in PRs, and even helps them learn the codebase. When people see it as a tool that makes their lives easier, they’ll actually want to use it.

Automation should augment human expertise, not replace it. The best systems handle the objective, repeatable checks, allowing human reviewers to focus on complex architectural decisions, business logic, and mentorship—tasks that require genuine understanding.

Balancing Automation with Human Insight

It's a huge mistake to think automation can completely replace a human reviewer. These tools are fantastic at spotting objective errors, but they have zero context for the big-picture stuff. Over-relying on automation can leave you with a codebase that's technically perfect but poorly designed and impossible to maintain.

This is even more true now that AI is writing so much of our code. Recent studies found that while AI coding tools give developers a perceived speed boost of 20-24%, they can actually slow down experienced engineers by 19% on complex tasks. It's no surprise that developers are worried, with 87% citing concerns over AI accuracy and 81% over security. You can read more about the surprising effects of AI coding tools on developer productivity.

The solution is a partnership. Let the automated code reviews do the first pass. They can handle the linting, security scanning, and consistency checks. This builds a clean foundation so that when a human reviewer steps in, they can skip the tedious stuff and focus on what really matters: Is the architecture right? Does the logic make sense for the business? Is this a good long-term solution?

A Few Common Questions About Automated Code Reviews

Even with a solid plan, jumping into automated code reviews always brings up a few questions. Let's tackle the most common ones we hear from engineering teams to clear things up before you get started.

Will Automated Code Reviews Replace Human Reviewers?

Not a chance. They’re designed to augment human reviewers, not replace them. Think of automated tools as a tireless assistant who handles the first pass on every single change. They’re brilliant at catching the objective stuff—style violations, security flaws, and simple logic errors—with perfect consistency.

This frees up your human reviewers to focus on what they do best: weighing in on architectural decisions, digging into complex business logic, and mentoring other developers. The goal is a partnership. Automation handles the repetitive checks so your team can have more meaningful, high-level conversations about the code's design and intent.

How Are AI-Powered Reviews Different from Traditional Linters?

Traditional linters and static analysis tools are like a grammar checker. They operate on a fixed set of rules, and they’re great at enforcing consistent syntax or flagging known anti-patterns. They know the rules of the language, but they don't understand the story you're trying to tell.

AI-powered reviews go way deeper. They can actually understand the developer's intent by looking at the code, comments, and the surrounding project context. This lets them catch nuanced issues like logical mistakes, performance regressions, and subtle bugs that a simple rule-based linter would never see. They act more like an experienced editor who not only catches typos but also points out when the plot doesn't make sense.

What’s the First Step to Getting Started?

The best way to begin is to start small with a pilot program. Pick a single team or a single project. But before you even look at tools, figure out your biggest pain point. Are review queues backing up? Is code style all over the place? Are you worried about security? Nailing this down helps you pick a tool that will deliver a win right away.

For teams already using AI to generate code, an in-IDE tool is the perfect place to start. It gives developers instant feedback right where they’re working. Run the pilot for a couple of weeks, get feedback from the team—both hard numbers and their gut feelings—and use that data to make the case for a bigger rollout.

A successful pilot proves the value of automated code reviews in your environment. It turns skeptics into advocates by showing developers how the right tool makes their daily work faster and easier, paving the way for a smooth, organization-wide adoption.

Can We Customize the Rules for Our Specific Standards?

Absolutely. In fact, you should demand it. Customization is what separates a genuinely useful tool from a noisy one. Modern platforms aren't one-size-fits-all; they are highly configurable systems built to adapt to your unique engineering culture.

You can and should set up rules that enforce your organization’s specific coding conventions, security policies, and compliance needs. This makes sure the automated feedback aligns perfectly with your team's established best practices. When you get it right, the tool stops feeling like an external enforcer and becomes a true extension of your own standards.
