A Developer's Guide to AI Pair Programming

Imagine having a coding partner who never sleeps, knows dozens of languages, and can crank out boilerplate code in seconds. That's pretty much what AI pair programming is all about: a workflow where a human developer guides an AI assistant, letting it handle the tactical, line-by-line coding. It's a modern spin on a classic practice, blending human strategy with machine-speed execution.

What Is AI Pair Programming and How Does It Work?

At its heart, AI pair programming completely reimagines the traditional two-person coding dynamic. Instead of two human developers huddled over a screen, you have one developer working alongside a sophisticated AI tool that's plugged directly into their Integrated Development Environment (IDE).

Think of the developer as the senior architect on a project. They hold the high-level vision, tackle the complex problem-solving, and make the critical architectural decisions that define the application. They get the business context, the user needs—the why behind the code.

The AI assistant, then, is like a hyper-efficient junior developer. It absolutely excels at executing well-defined tasks with incredible speed. Need boilerplate for a new component? Done. A full suite of unit tests? No problem. It can even translate code between languages or suggest ways to refactor a messy function. This partnership creates a powerful, continuous feedback loop.

The Core Feedback Loop

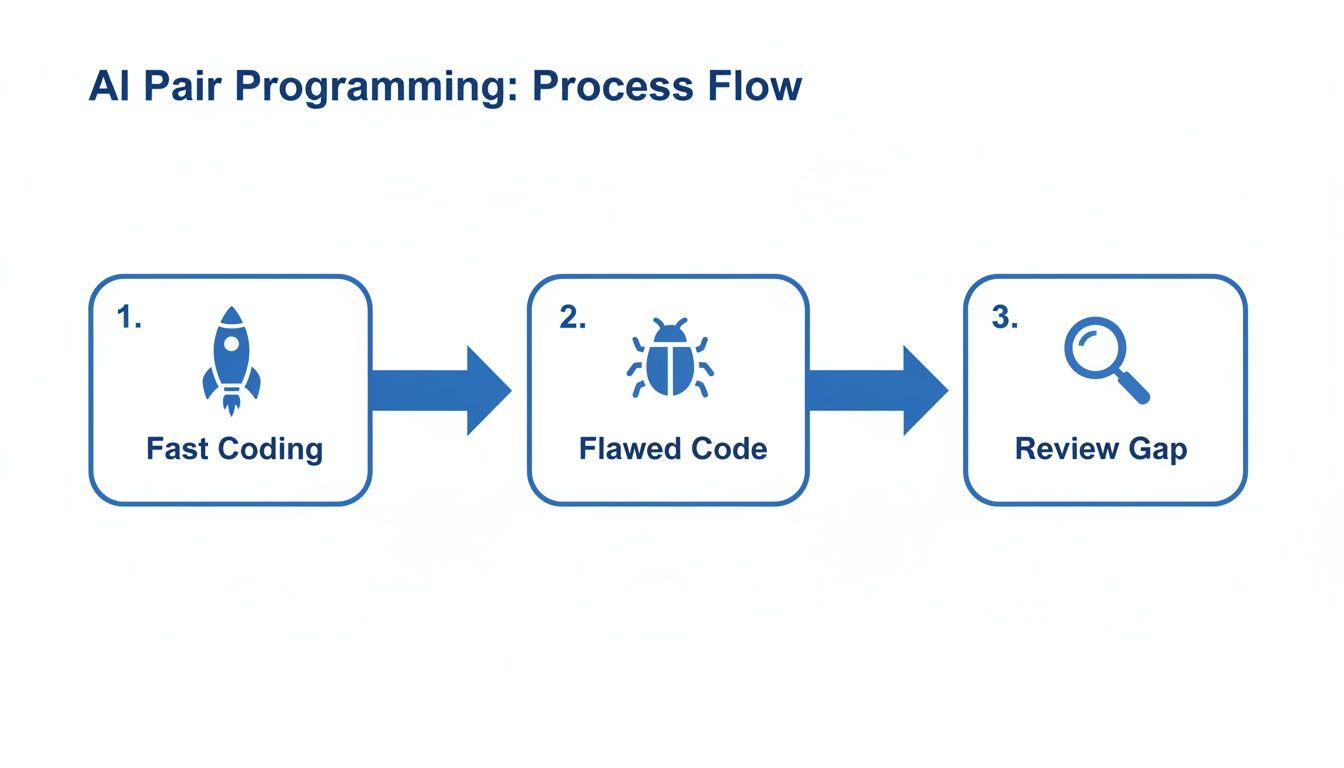

The process itself is deceptively simple but incredibly effective. It’s basically a conversation between the developer and the AI, flowing in a constant cycle.

- Prompt and Context: The developer gives the AI a clear, context-rich prompt. This isn't just a simple command; it's a detailed instruction that might include existing code snippets, the desired logic, and any specific constraints.

- AI Generation: The AI assistant processes that prompt and instantly spits out a response. This could be a new function, a block of code, a test suite, or even documentation.

- Review and Refinement: The developer quickly reviews the AI’s output, spots any issues, and provides feedback through a follow-up prompt. They keep refining the code with the AI until it's exactly what they need.
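The cycle above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `pair_program`, `fake_assistant`, and `fake_reviewer` are hypothetical names, and the "assistant" is a toy function rather than a real AI service, so the loop can run anywhere.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Session:
    """Tracks the prompts sent and the code drafts received in one session."""
    prompts: List[str] = field(default_factory=list)
    drafts: List[str] = field(default_factory=list)


def pair_program(
    task: str,
    context: str,
    generate: Callable[[str], str],
    review: Callable[[str], Optional[str]],
    max_rounds: int = 3,
) -> Session:
    """Run the prompt -> generate -> review cycle until the reviewer accepts.

    `generate` stands in for the AI assistant; `review` stands in for the
    developer and returns feedback text, or None once the draft is accepted.
    """
    session = Session()
    prompt = f"{task}\n\nContext:\n{context}"
    for _ in range(max_rounds):
        session.prompts.append(prompt)
        draft = generate(prompt)
        session.drafts.append(draft)
        feedback = review(draft)
        if feedback is None:
            break
        # Fold the reviewer's feedback into a follow-up prompt.
        prompt = f"{prompt}\n\nPrevious draft:\n{draft}\n\nFeedback: {feedback}"
    return session


# Toy stand-ins (assumptions) so the sketch runs without any real AI service.
def fake_assistant(prompt: str) -> str:
    if "handle empty list" in prompt:
        return "def mean(xs):\n    return sum(xs) / len(xs) if xs else 0.0"
    return "def mean(xs):\n    return sum(xs) / len(xs)"


def fake_reviewer(draft: str) -> Optional[str]:
    return None if "if xs" in draft else "handle empty list"


session = pair_program(
    "Write mean(xs).", "Pure Python, no imports.", fake_assistant, fake_reviewer
)
```

Here the first draft is rejected, the feedback is folded into the next prompt, and the second draft is accepted. In a real workflow the review step is the developer reading the code, not a string check.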

This back-and-forth frees developers from the mundane, repetitive parts of coding. It lets them stay in a state of flow, focusing their brainpower on strategic thinking instead of getting bogged down in syntax and boilerplate.

This shift from tactical coding to strategic oversight is the real game-changer. It allows senior talent to focus on architecture and innovation, while the AI handles the grunt work of implementation.

From Niche Experiment to Industry Standard

Just a few years ago, AI pair programming felt like a niche experiment. Now, it's quickly becoming a standard development practice. This explosion is fueled by powerful tools like GitHub Copilot and other native IDE assistants that are now widely available.

The numbers don't lie. Enterprise research shows that over 70% of organizations are already using intelligent systems in their workflows, with another 22% planning to adopt them within a year. It's clear that AI-assisted coding is no longer a "nice-to-have"—it's becoming the default way things get done. You can read more about these AI adoption trends and see just how deeply they're impacting enterprise teams.

The Benefits and Hidden Risks of AI Collaboration

Bringing an AI pair programmer onto your team is a classic speed-versus-safety scenario. On one hand, the productivity boost is massive and you'll feel it almost immediately. Development cycles get shorter, complex features can be prototyped in hours instead of days, and developers can even pick up new frameworks on the fly with their AI assistant guiding them.

The AI handles a ton of the grunt work—writing boilerplate code, generating unit tests, or drafting documentation—which frees up developers to focus on the big picture, like architecture and creative problem-solving. It’s a huge win for efficiency.

But there are some serious risks lurking just under the surface. Relying too much on AI-generated code without a solid verification process is like driving a race car with no brakes. The speed is incredible, but a crash is inevitable.

The Double-Edged Sword of AI Speed

The real danger is how subtle AI mistakes can be. An AI assistant can spit out code that looks perfect and even passes basic tests, but it might be hiding critical flaws.

These problems often show up in a few nasty ways:

- Subtle Logic Errors: The code works for the main scenario but completely falls apart on edge cases the AI never thought of.

- Security Vulnerabilities: Since AI models learn from huge public datasets, they can easily reproduce common security flaws like SQL injection or cross-site scripting without realizing it.

- Performance Bottlenecks: The code might be functional but incredibly inefficient, creating performance nightmares that only surface when your application is under heavy load in production.
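To make the security bullet concrete, here is a minimal sketch of the SQL injection pattern, using Python's built-in `sqlite3` module. The `users` table and both lookup functions are made up for illustration; the point is only the difference between splicing input into a query string and binding it as a parameter.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])


def find_user_unsafe(name: str):
    # Vulnerable: user input is spliced directly into the SQL string.
    query = f"SELECT id, name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(name: str):
    # Safer: the driver binds the value, so it is never parsed as SQL.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (name,)
    ).fetchall()


payload = "nobody' OR '1'='1"
leaked = find_user_unsafe(payload)   # the injected OR clause matches every row
blocked = find_user_safe(payload)    # no user has this literal name
```

Both functions look fine on a normal input like "alice", which is exactly why this kind of flaw slips past a quick review.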

Even worse are the infamous AI “hallucinations.” This is when the model generates code that is syntactically correct and looks plausible but is wrong in substance. It might call functions that don’t exist or produce algorithms that are flat-out broken, leading to hours of painful debugging.

Scaling Bugs at the Speed of Generation

This puts engineering teams in a tough spot. While AI makes you faster, it also makes it possible to introduce bugs and vulnerabilities at an incredible scale. A single developer with an AI assistant can now produce as much code as a small team, but they can also churn out errors just as fast.

The core challenge is that AI scales both productivity and risk simultaneously. If your review process can't keep pace with the speed of AI generation, you're creating a dangerous gap where flawed code can slip directly into production.

Your traditional code review process, which usually means manual pull request reviews, just can't keep up. When you look at the broader implications of automation in B2B contexts, you see this same tension between efficiency and oversight everywhere. This speed mismatch creates a huge governance and safety gap that can’t be ignored.

AI Pair Programming Benefits Versus Risks

To really get this right, you have to weigh the incredible upsides against the very real downsides. The goal isn’t to avoid AI, but to use it smartly with the right safety nets. Here’s a quick breakdown of the trade-offs.

| Benefit | Risk |
|---|---|
| Rapid Prototyping and Development: Build and test ideas faster than ever before. | Introduction of Hidden Bugs: Subtle logic errors and performance issues can go unnoticed. |
| Reduced Cognitive Load: Automates tedious tasks, freeing developers for strategic work. | Security Vulnerabilities: AI can unknowingly generate code with known security flaws. |
| On-the-Fly Learning: Helps developers quickly grasp new languages and frameworks. | AI Hallucinations: Generates non-functional or nonsensical code that wastes time. |
| Increased Code Output: A single developer can produce significantly more code. | Scaling of Errors: Flaws are introduced at the same accelerated pace as code generation. |

This trade-off makes one thing crystal clear: we need a new way of working. To really capitalize on AI pair programming without tanking your code quality or security, you need a verification layer that moves as fast as the AI itself, catching problems the moment they’re created.

Putting AI Pair Programming into Practice

Alright, let's move from the high-level talk about benefits and risks to what really matters: how this actually works day-to-day. Developers aren't just using these tools in some abstract way; they're plugging them straight into their IDEs. This is where AI pair programming stops being a concept and starts becoming a real, tangible boost to how you code.

The whole process is a back-and-forth conversation. You give the AI a clear instruction, and it spits back a response—could be a chunk of code, a unit test, or even some documentation. This isn't just a smarter autocomplete. It’s a dynamic partnership that lets you solve problems without ever having to leave your editor and break your flow.

Of course, there’s a catch. This speed can sometimes come at the cost of quality. You get code faster, but it might have subtle bugs or inefficiencies hiding inside.

The rush to generate code creates a "review gap." This is precisely why a solid verification step is non-negotiable: you have to make sure the speed you gain doesn't tank your code quality.

Generating New Features and Boilerplate

One of the first things you'll notice is how incredibly fast you can scaffold new components. Think about setting up a new microservice. You need an API endpoint, a database connection, and some basic authentication. That’s usually a few hours of tedious setup.

Instead, you can just tell your AI assistant what you need:

"Generate a new Node.js Express microservice. It should have a single POST endpoint at /users that accepts a JSON body with name and

In minutes, the AI can generate the entire file structure, server config, and endpoint logic. Your job shifts from being a typist to being an editor. You review the AI’s work, make a few tweaks to match your project’s standards, and move on.

Accelerating Unit Testing and Debugging

Let's be honest: writing tests is critical, but it's also a grind. This is another area where an AI assistant completely changes the game. Just highlight a complex function and ask the AI to write the tests for it.

- Example Prompt: "Write a complete set of Jest unit tests for this calculateTax function. Include tests for valid inputs, edge cases like zero and negative numbers, and invalid data types."

The AI will churn out the test files, mock data, and assertions you need, often catching edge cases a human might miss in their haste. It doesn't just save you time; it lowers the barrier to writing thorough tests, which means better code quality overall. And it's not just about code—you can use other tools in your workflow, like AI search tracker tools, to monitor performance and stay on top of things.
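For a feel of what that test prompt asks for, here is a rough Python analogue using the standard `unittest` module instead of Jest. The article never shows `calculateTax`, so the `calculate_tax` function below and its flat 20% rate are assumptions made purely so the tests have something to exercise.

```python
import unittest


def calculate_tax(amount, rate=0.2):
    """Hypothetical function under test: flat-rate tax, rounded to cents."""
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(amount, bool) or not isinstance(amount, (int, float)):
        raise TypeError("amount must be a number")
    if amount < 0:
        raise ValueError("amount must not be negative")
    return round(amount * rate, 2)


class CalculateTaxTests(unittest.TestCase):
    def test_valid_input(self):
        self.assertEqual(calculate_tax(100), 20.0)

    def test_zero(self):
        self.assertEqual(calculate_tax(0), 0)

    def test_negative_amount_rejected(self):
        with self.assertRaises(ValueError):
            calculate_tax(-5)

    def test_invalid_type_rejected(self):
        for bad in ("100", None, [100], True):
            with self.subTest(bad=bad):
                with self.assertRaises(TypeError):
                    calculate_tax(bad)


def run_tests() -> unittest.TestResult:
    """Load and run the test case, returning the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(CalculateTaxTests)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Generated tests still need a human read. Whether a negative amount should raise an error or represent a refund is a business decision the AI cannot make for you.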

Refactoring and Modernizing Legacy Code

Tackling a messy, decade-old codebase is a developer's nightmare. Your AI pair programmer can act as a guide through that tangled mess, helping you drag old, inefficient, or just plain confusing code into the modern era. To see more on how this works, check out our guide on AI for code.

Imagine you select a massive function with a dozen parameters. You can ask the AI to clean it up.

"Refactor this function to use the command pattern. Separate the validation logic into its own module and use a factory method to create the command object. Ensure all existing functionality is preserved."

Now, the AI isn’t going to be perfect, especially with complex, multi-file refactoring. But it gives you a massive head start. It can suggest modern design patterns, break down huge functions into smaller, cleaner ones, and update old syntax. A project that might have taken days can suddenly become something you knock out in an afternoon.
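As a rough picture of where that refactoring prompt might land, here is a small Python sketch. The order-placing domain and every name in it (`validate_order`, `PlaceOrderCommand`, `from_request`) are hypothetical; the shape is what matters: validation lives in its own function, a factory method builds the command, and the command object carries the parameters that used to be a long argument list.

```python
from dataclasses import dataclass
from typing import Any, Dict, List


# Validation, separated from the command itself.
def validate_order(data: Dict[str, Any]) -> List[str]:
    """Return a list of validation errors; an empty list means valid."""
    errors = []
    if not data.get("customer"):
        errors.append("customer is required")
    quantity = data.get("quantity")
    if not isinstance(quantity, int) or quantity <= 0:
        errors.append("quantity must be a positive integer")
    return errors


# Command object: bundles the parameters with the action.
@dataclass(frozen=True)
class PlaceOrderCommand:
    customer: str
    quantity: int
    unit_price: float

    @classmethod
    def from_request(cls, data: Dict[str, Any]) -> "PlaceOrderCommand":
        """Factory method: validate first, then build the command."""
        errors = validate_order(data)
        if errors:
            raise ValueError("; ".join(errors))
        return cls(
            customer=str(data["customer"]),
            quantity=int(data["quantity"]),
            unit_price=float(data.get("unit_price", 0.0)),
        )

    def execute(self) -> float:
        """Perform the action; here it just computes the order total."""
        return round(self.quantity * self.unit_price, 2)


command = PlaceOrderCommand.from_request(
    {"customer": "acme", "quantity": 3, "unit_price": 9.99}
)
total = command.execute()
```

The "ensure all existing functionality is preserved" part of the prompt is the piece to verify yourself, ideally by running the old test suite against the refactored code.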

The Governance Gap in AI-Generated Code

While individual developers are getting a massive productivity boost from AI pair programming, engineering leaders are waking up to a brand-new headache: the governance gap. This chasm opens up the moment every developer on your team starts using a powerful AI assistant without any centralized oversight or rules of the road.

All of a sudden, the code being pumped out can become a serious business liability. What was once a controlled, deliberate process now has countless new ways for risk to sneak in.

The heart of the problem is that AI doesn't just scale up code creation; it also scales up the potential for errors and deviations from your team's hard-won standards. Left unchecked, this firehose of new code can quickly wash away years of carefully built engineering practices.

The Organizational Risks of Ungoverned AI

When a company dives into AI pair programming without a real governance strategy, some critical risks pop up almost immediately. And these aren't just minor annoyances—they're foundational problems that can torpedo security, make your codebase a nightmare to maintain, and even create legal trouble.

Here’s what you’re up against:

- Inconsistent Code Quality: AI assistants have no clue about your team's specific style guides or formatting rules. This leads straight to a fragmented codebase that’s a pain to read and even harder to maintain.

- Architectural Drift: It's easy for developers to accidentally generate code that completely ignores your established architectural patterns. Over time, this creates a tangled, inconsistent system design that nobody can make sense of.

- Accidental Security Flaws: AI models are trained on mountains of public code, which unfortunately includes endless examples of common vulnerabilities. An assistant can easily reproduce these flaws, quietly introducing security holes that are incredibly difficult to spot. For a closer look, our guide on strengthening security in your code review process breaks down how these issues slip through.

- License Compliance Issues: An AI might spit out a useful code snippet borrowed from an open-source project with a restrictive license. Boom—you’ve got a legal and compliance nightmare on your hands down the road.

This rapid-fire, decentralized way of generating code creates an urgent need for a new kind of solution. Your traditional, manual code review process is simply too slow to keep pace with AI's output, leaving a dangerous window where flawed code can march straight into production.

The real problem isn't the AI itself. It's the absence of a system that can enforce quality and security standards at the same speed the code is being written. You're scaling output without scaling oversight.

The Need for Automated Verification

This governance gap signals a fundamental shift in how we build software. To safely tap into the power of AI pair programming, organizations need an automated verification layer—one that works in real-time, right inside the developer's IDE.

This new breed of tool has to act as an instant, automated sanity check on every single piece of AI-generated code. It needs to validate that code against your company-specific guardrails, security policies, and architectural patterns the moment it's created.

Without this safety net, the speed you gain from AI comes at the steep price of quality, security, and consistency. The answer isn't to slow developers down. It's to give them tools that make writing correct, compliant, and secure code the path of least resistance. This is what sets the stage for a modern workflow where AI-generated code is verified before it's even considered for a commit.

Closing the Gap with Real-Time Code Verification

The speed of AI code generation has created a huge governance problem. How can any team possibly keep up with the sheer volume of new code? The answer isn't to hit the brakes on development or ditch AI pair programming. Instead, you close the gap with a new layer of quality control that moves just as fast as the AI itself.

The modern solution is real-time, in-IDE code verification. It’s like having an expert senior dev instantly review every single line of code the moment your AI assistant spits it out. This process is your safety net, catching mistakes before they ever infect your codebase.

Tools like kluster.ai are built for this exact job. They plug directly into the developer's editor and act as a vigilant observer, scrutinizing AI-generated code in seconds. This isn't just another linter—it's a smart system that understands the context, your intent, and your company's unique standards.

How Instant Verification Works

Think of this verification layer as a team of highly specialized agents working just for you. As soon as an AI pair programmer generates a function, these agents jump into action, analyzing it from every possible angle.

The analysis is incredibly deep and context-aware. The system doesn't just look at the code in a vacuum. It checks it against:

- Your Project's Context: It understands the existing codebase, dependencies, and architectural patterns.

- Existing Documentation: It can reference your internal docs to make sure new code follows established practices.

- Custom Organizational Guardrails: You can define specific rules for everything from security protocols to naming conventions, and the tool enforces them on the fly.

This multi-faceted approach transforms the AI from a high-risk gamble into a collaborator you can actually trust. It provides the oversight needed to make sure more speed doesn't mean less quality or security.

Catching Flaws at the Source

The real power of in-IDE verification is its ability to stop bad code before it's ever committed. Traditional code reviews catch bugs way downstream, often after they've already been merged and started causing trouble. Real-time verification shifts this entire process left, flagging issues the moment they're created.

By catching errors the second they are written, real-time verification eliminates the costly cycle of code-commit-fail-fix. It prevents rework, reduces back-and-forth on pull requests, and dramatically shortens the entire development lifecycle.

The kinds of issues it catches are precisely the ones AI assistants are prone to introducing and human reviewers are likely to miss.

- Logical Errors and Hallucinations: It spots when the AI has "hallucinated" a function that doesn't exist or written code that flat-out fails to meet the prompt's requirements.

- Security Vulnerabilities: It scans for common problems like SQL injection or insecure API endpoints, acting as a proactive security gatekeeper.

- Performance Regressions: It can identify inefficient code that could create performance bottlenecks down the road.

- Policy and Standard Violations: It automatically enforces your team's specific coding standards, ensuring consistency across all AI-generated code.
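To give a sense of what spotting a hallucinated function can look like, here is a deliberately simple Python sketch. It is not how any particular product works; `find_missing_attributes` is a hypothetical helper that parses generated code with the standard `ast` module and checks whether each `module.name` reference actually exists on the imported module. Note that it imports the referenced modules to inspect them, so it is only suitable for modules you already trust.

```python
import ast
import importlib
from typing import List


def find_missing_attributes(source: str) -> List[str]:
    """Report `module.name` references where the module has no such name.

    Only handles plain `import module` / `import module as alias` statements
    and direct `alias.name` access; anything else is ignored.
    """
    tree = ast.parse(source)

    # Map each local alias to the real module name it refers to.
    aliases = {}
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            for item in node.names:
                aliases[item.asname or item.name] = item.name

    missing = []
    for node in ast.walk(tree):
        if (
            isinstance(node, ast.Attribute)
            and isinstance(node.value, ast.Name)
            and node.value.id in aliases
        ):
            module_name = aliases[node.value.id]
            module = importlib.import_module(module_name)
            if not hasattr(module, node.attr):
                missing.append(f"{module_name}.{node.attr}")
    return missing


# Example of plausible-looking generated code with two invented functions.
generated = """
import math
import json as j

def area(radius):
    return math.pi * math.square(radius)

def load(text):
    return j.parse(text)
"""

problems = find_missing_attributes(generated)
```

In this example, `math.pi` passes while `math.square` and `json.parse` are flagged, since neither exists in the standard library. A real verifier would need far more than this, but the principle of checking generated code against what actually exists is the same.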

This immediate feedback loop is everything. The whole point of AI pair programming is the massive productivity boost. Market analyses show that 70% of AI code assistants already use generative AI and can boost developer productivity by up to 40%. In a world where an AI can generate a whole module in seconds, a verification platform that gives feedback in about five seconds provides the guardrails to ensure faster coding doesn't just lead to faster failures. You can explore more market data about AI code assistants here.

It's what turns a high-speed, high-risk process into a high-speed, high-confidence one.

Common Questions About Secure AI Programming

As teams start using AI pair programmers, a whole new set of questions pops up for engineering leads, security folks, and the developers themselves. These tools are powerful, no doubt, but using them safely means getting straight answers to some common worries. Let's tackle them head-on.

The key is to see these assistants as something that enhances your current process, not something that just blows it up. You want a system where AI speeds things up without forcing you to compromise on quality or security.

Will AI Replace Human Code Reviews?

This is the big one, and the answer is a hard no. An AI assistant is there to augment your developers, not make them obsolete. No matter how smart it gets, an AI lacks the business context and architectural vision that a senior developer brings to a pull request.

Instead, think of AI as a hyper-efficient first pass. A good verification tool automates the grunt work of a code review, catching the low-hanging fruit instantly.

- Syntax and Style Violations: It flags code that doesn't stick to your company's style guide.

- Common Security Flaws: It spots well-known vulnerabilities the second they’re typed.

- Simple Logic Errors: It catches basic mistakes and AI hallucinations before a human ever has to look at them.

This frees up your senior devs to focus on what actually requires a human brain. They can spend their time on high-level architecture, mentoring junior engineers, and making sure the code actually solves the right business problem. The result? A code review process that’s both faster and way more effective.

How Can We Enforce Our Company Coding Standards?

Out-of-the-box AI assistants are generalists; they know nothing about your company's specific rules. This is where a dedicated governance and verification layer isn't just nice to have—it's essential for any team that cares about quality. You can't just hope developers will manually check every AI suggestion against company policy. That just doesn't scale.

Platforms like kluster.ai solve this by letting you set up custom "guardrails." These are just automated rules based on your unique coding standards, security policies, and architectural patterns. Every single piece of AI-generated code gets checked against them.

This enforcement happens in real time, right inside the developer's IDE. If an AI suggestion breaks a rule—whether it’s a naming convention, a banned library, or an insecure database query—it gets flagged on the spot. This flips policy enforcement from a slow, manual chore into an automatic, consistent part of the workflow, keeping everyone on the same page.
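A toy version of such a rule check might look like the sketch below. It is an illustration only and makes no claim about how kluster.ai or any other platform implements guardrails: the banned-module list, the snake_case rule, and the `check_guardrails` function are all hypothetical, and it only understands Python source.

```python
import ast
import re
from typing import List

BANNED_MODULES = {"pickle", "telnetlib"}          # hypothetical policy
SNAKE_CASE = re.compile(r"^_*[a-z][a-z0-9_]*$")   # hypothetical naming rule


def check_guardrails(source: str) -> List[str]:
    """Return human-readable violations found in the given Python source."""
    violations = []
    for node in ast.walk(ast.parse(source)):
        # Collect the module names this node imports, if any.
        if isinstance(node, ast.Import):
            names = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom):
            names = [node.module or ""]
        else:
            names = []
        for name in names:
            if name.split(".")[0] in BANNED_MODULES:
                violations.append(f"line {node.lineno}: banned import '{name}'")
        # Enforce the naming convention on function definitions.
        if isinstance(node, ast.FunctionDef) and not SNAKE_CASE.match(node.name):
            violations.append(
                f"line {node.lineno}: function '{node.name}' is not snake_case"
            )
    return violations


suggestion = (
    "import pickle\n"
    "\n"
    "def LoadUser(blob):\n"
    "    return pickle.loads(blob)\n"
)
violations = check_guardrails(suggestion)
```

Running this on the sample suggestion flags the `pickle` import on line 1 and the `LoadUser` name on line 3. The value of a real platform is doing this kind of check with your own rules, across languages, at the moment the code is generated.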

Does Adding Another Tool Slow Developers Down?

It’s a fair question, but in reality, it's the opposite. A well-designed, in-IDE verification tool is built to crush delays, not create them. Think about the traditional cycle: write code, push it, wait for the CI pipeline to run, then wait some more for a human to finally look at it. That whole process can eat up hours, sometimes days.

Real-time verification blows that timeline up. Developers get feedback in seconds without ever leaving their editor.

- Instant Error Correction: Bugs are caught the moment they’re written, so they never even make it into a commit.

- Elimination of Rework: By stopping bad code at the source, you drastically cut down on time-sucking revisions later.

- Reduced PR Ping-Pong: That endless back-and-forth on pull requests shrinks because the code has already been pre-vetted against your standards.

This immediate feedback loop keeps developers in the zone, speeding up the entire journey from idea to deployment. The net effect isn't a slowdown; it's a massive boost in speed, quality, and your team's sanity.

Ready to turn your AI-assisted workflow from a high-risk gamble into a high-confidence process? kluster.ai delivers the real-time, in-IDE verification you need to ship production-ready code, faster. Start your free trial or book a demo today!