Mastering Software Peer Review for Quality Code

Software peer review is the practice of developers systematically checking each other's code for mistakes. Think of it as a pre-flight checklist for your software: a critical quality check designed to catch bugs, security flaws, and design issues before they ever reach a user.

Why Software Peer Review Is Your Team’s Superpower

Let's be real—shipping great software is tough. Deadlines are tight, requirements are a moving target, and complexity seems to grow by the day. In that environment, it's dangerously easy for small mistakes to slip through the cracks, only to blow up into major headaches once the code is live.

This is where a solid software peer review process stops being a "nice-to-have" and becomes a genuine superpower for high-performing engineering teams.

Many developers see code reviews as just another form of bug hunting—a tedious chore you have to get through before you can merge a pull request. But that view misses the forest for the trees. The real magic of peer review goes way beyond just catching typos and errors. It’s a foundational practice that builds a culture of shared ownership, constant learning, and collective responsibility for the health of the entire codebase.

Beyond a Simple Bug Hunt

Calling a peer review just a "bug hunt" is like saying a pilot's pre-flight checklist is just "looking for broken parts." Sure, that's part of it, but the deeper goal is to make sure the whole system is safe, efficient, and ready for its mission. In the same way, a modern review process isn't just about the new code; it's about making sure that new code integrates perfectly with everything that already exists.

The real purpose of a software peer review is to:

- Catch errors early: Find and fix bugs when they’re cheap and easy to solve—not after they’ve reached production.

- Spread knowledge: Tear down information silos by getting more eyes on different parts of the code. This ensures no single person is a bottleneck.

- Build a resilient codebase: Enforce consistent coding standards and architectural patterns that make the software easier to maintain and scale down the road.

A mature review process changes the team's mindset from "my code" to "our code." It shifts the focus from individual heroics to team success, creating an environment where feedback isn't criticism—it's a gift that makes everyone better.

Adopting a strong software peer review process is a huge step toward implementing comprehensive software risk solutions, baking quality and stability in from the very beginning. As we go on, we’ll dig into the tangible benefits, the different ways to do it, and the new wave of AI tools that are making this essential practice more powerful than ever.

Choosing the Right Review Method for Your Team

Picking a software peer review method isn’t about finding the one "best" way to do things. It's about finding the best fit for your team's culture, the specific demands of your project, and how fast you need to move. Think of it like choosing the right tool for a job—you wouldn't bring a sledgehammer to a task that needs a precision screwdriver.

The method you land on will directly shape your development cycle. A bad fit can create frustrating bottlenecks for developers and, ironically, fail to catch the very bugs it was meant to prevent. The trick is to match your review style to your main goal, whether that’s building mission-critical, bulletproof software or pushing out rapid, iterative updates.

Formal Inspections: Rigorous and Deliberate

At the most structured end of the spectrum, you have formal inspections. This is the courtroom proceeding of code review. It’s a heavyweight process with clearly defined roles (like a moderator, the author, and inspectors), strict preparation phases, and official meetings where the code is scrutinized line-by-line against a checklist.

This approach is incredibly thorough, which makes it perfect for systems where failure is simply not an option—think aerospace, medical devices, or the software processing financial transactions. But its slow, deliberate pace makes it a terrible fit for fast-moving agile teams where speed is everything.

Pair Programming: Collaborative and Real-Time

Somewhere in the middle, you’ll find pair programming. This is a highly collaborative, in-the-moment approach where two developers share one workstation. One person, the "driver," writes the code, while the other, the "navigator," watches, reviews, and offers immediate feedback on everything from overall strategy to potential typos.

This method is fantastic for spreading knowledge across the team and getting instant feedback, especially when you're onboarding new engineers or tackling a really complex problem. The review happens as the code is written, so there's no separate review step later. The obvious trade-off is that it ties up two developers on a single task, which might not be the most efficient way to handle simpler tickets.

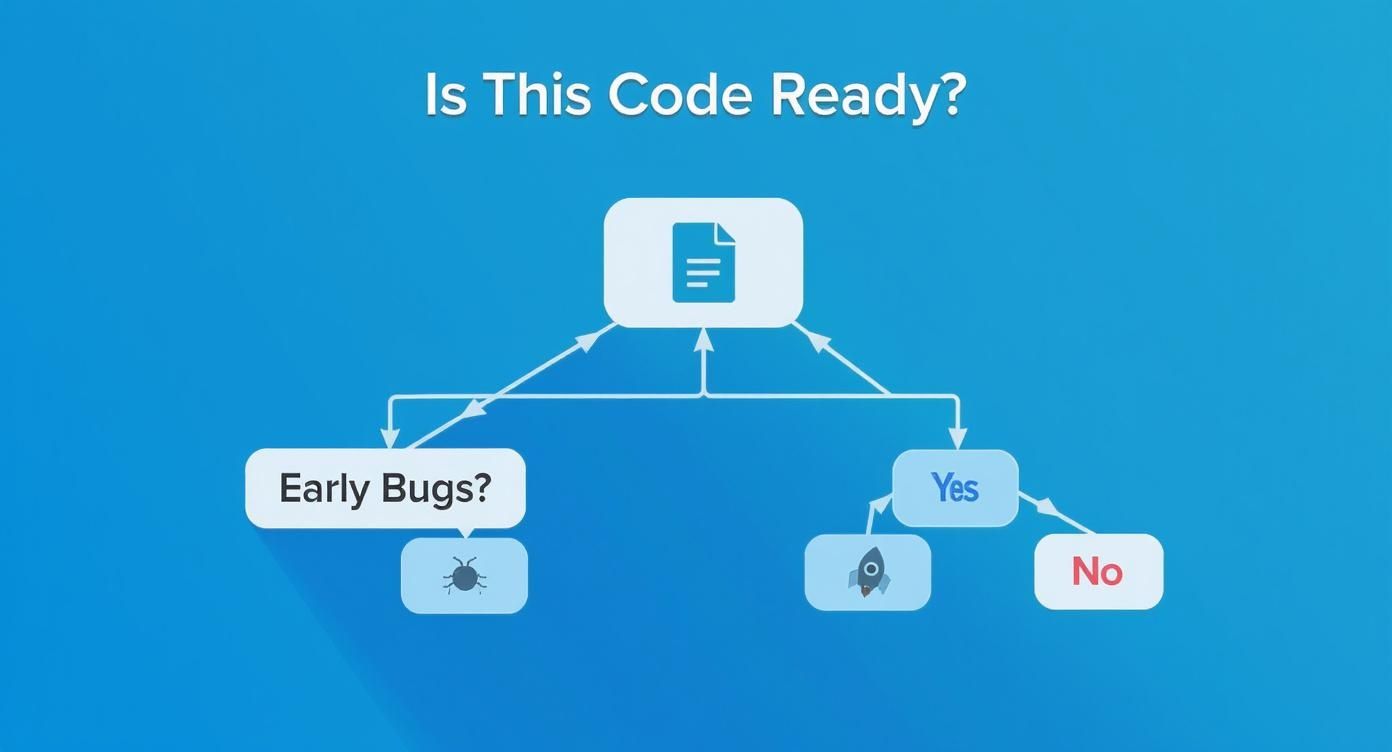

This decision tree helps visualize when code is ready to move forward or needs another look.

As the diagram shows, review is a crucial early checkpoint: when it catches bugs, it sends the code back for refinement before it ever gets close to deployment.

Asynchronous Pull Requests: Flexible and Modern

By far the most common method today, especially for teams on GitHub or GitLab, is the asynchronous pull request (PR) review. A developer finishes their work on a separate branch and then submits a PR, asking teammates to look over the changes before they get merged into the main codebase.

This approach gives teams a ton of flexibility. Reviewers can jump in and provide feedback on their own schedule, and the whole conversation is documented right there in the PR for anyone to see later. It’s a model that works incredibly well for distributed teams and modern agile workflows.

The core strength of the pull request model is its balance between thoroughness and speed. It avoids the rigidity of formal inspections while providing a more documented and scalable process than pair programming.

The biggest pitfall? The asynchronous nature can lead to long delays if reviewers are slow to respond, turning the PR queue into a major bottleneck. Keeping PRs small and focused is the key to making this process hum. In fact, a study by Google found that smaller changes—typically under 200 lines—get faster and more thorough reviews.
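A size rule is easier to keep when it is checked automatically before anyone is asked to review. The sketch below is a minimal illustration under stated assumptions: it sums added and deleted lines from `git diff --numstat` output (a real Git option that prints `added<TAB>deleted<TAB>path` per file, with `-` for binary files). The function names and the 400-line default are hypothetical choices, not a standard, and a team following the smaller-is-better guidance above could pass `max_lines=200`.

```python
def changed_lines(numstat_output: str) -> int:
    """Sum added + deleted lines from `git diff --numstat` output."""
    total = 0
    for line in numstat_output.splitlines():
        if not line.strip():
            continue  # skip blank lines
        added, deleted, _path = line.split("\t", 2)
        # Binary files are reported as "-<TAB>-<TAB>path" and have no line counts.
        if added == "-" or deleted == "-":
            continue
        total += int(added) + int(deleted)
    return total


def is_reviewable(numstat_output: str, max_lines: int = 400) -> bool:
    """Return True if the change is at or under the (assumed) team size limit."""
    return changed_lines(numstat_output) <= max_lines


# Example input in numstat format: two text files and one binary file.
sample = "120\t30\tsrc/app.py\n-\t-\tassets/logo.png\n15\t0\ttests/test_app.py\n"
print(changed_lines(sample))                 # 165
print(is_reviewable(sample))                 # True
print(is_reviewable(sample, max_lines=150))  # False
```

In a CI job you could feed it the output of something like `git diff --numstat origin/main...HEAD`; the remote and branch names there are assumptions about your setup.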

Comparing Software Peer Review Methods

To help you figure out what might work best for your team, here’s a quick breakdown of the common review methods we’ve discussed.

| Review Method | Primary Goal | Process Style | Best For |
|---|---|---|---|
| Formal Inspection | Maximum defect detection and compliance | Highly structured, meeting-based, and offline | Safety-critical systems, government projects, and industries with strict regulatory requirements. |
| Pair Programming | Knowledge sharing and real-time feedback | Synchronous, collaborative, and hands-on | Complex problem-solving, onboarding new developers, and tackling high-risk features. |
| Pull Request Review | Balanced quality control and development speed | Asynchronous, tool-based, and documented | Most agile and DevOps teams, open-source projects, and distributed workforces. |

Ultimately, the right choice depends entirely on your context. A startup building a social media app will have very different review needs than a team developing software for a pacemaker.

The Real-World Benefits of a Strong Review Culture

A solid software peer review process does a lot more than just clean up code. It’s an investment that delivers real, measurable wins for your team's performance and your product’s success, paying off long after a feature goes live.

The most obvious win is a massive jump in code quality. It doesn’t matter how senior a developer is; everyone has blind spots. A second pair of eyes can catch logical errors, subtle bugs, and missed edge cases that the original author just can't see after staring at the same screen for hours.

Catching these issues early is everything. A bug found during a peer review is far cheaper and faster to fix than one that makes it to production, where it can cause customer headaches, corrupt data, or trigger costly downtime. This turns quality assurance from a last-minute checkpoint into something that's baked into the development process from the start.

More Than Just a Bug Hunt

Beyond catching mistakes, a consistent review culture is one of the best mentoring tools you can have. When a senior dev reviews a junior engineer’s work, it’s a real-world learning moment. They can share insights on architectural patterns, performance tricks, and better ways to write code—lessons that stick way better than anything from a textbook.

This dynamic helps the whole team level up and smashes knowledge silos.

- Shared Ownership: When multiple people review a piece of code, they get familiar with it. Suddenly, the original author isn't the only one who knows how a critical feature works.

- Consistent Standards: Reviews are how you enforce your team's coding standards, naming conventions, and design principles. That consistency makes the entire codebase easier for everyone to navigate and maintain down the road.

A healthy review culture creates a feedback loop that elevates everyone. It builds a shared understanding of the codebase and reinforces the idea that quality is a collective responsibility, not just an individual one.

This collaborative approach isn't just a nice idea; it's becoming the standard. Industry data shows over 70% of organizations see better code quality and a whopping 50% drop in software bugs after setting up structured peer reviews. With agile and DevOps now the norm, over 70% of companies in the U.S. and Canada are using automated tools to keep up. For more stats, check out the peer code review software market report from Verified Market Research.

Boosting Security and Maintainability

Finally, a strong software peer review process is a critical security layer. When reviewers are trained to look for common vulnerabilities—like SQL injection, cross-site scripting (XSS), or sloppy data handling—they can stop security flaws before they ever see the light of day.

This proactive stance on security is far more effective than just relying on occasional penetration tests. It builds a security-first mindset right into the development team, making everyone responsible for shipping secure code. Over time, this leads to a more resilient application, cuts down on technical debt, and makes future work faster and less risky.
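Here is the SQL injection risk mentioned above, written as a self-contained sketch with Python's built-in `sqlite3` module and an in-memory database. The table, column, and function names are invented for illustration; the point is the difference a reviewer should look for between splicing user input into SQL text and passing it as a bound parameter.

```python
import sqlite3


def find_user_unsafe(conn, username):
    # Flag this in review: user input is pasted into the SQL string, so a
    # value like "x' OR '1'='1" changes what the query means.
    query = f"SELECT id, name FROM users WHERE name = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, username):
    # Preferred: a parameterized query. sqlite3 binds the value separately,
    # so it is always treated as data, never as SQL.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (username,)
    ).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

payload = "x' OR '1'='1"
print(len(find_user_unsafe(conn, payload)))  # 2: every row leaks
print(len(find_user_safe(conn, payload)))    # 0: no user has that literal name
```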

Building a Peer Review Process That Actually Works

Knowing the theory behind software peer review is one thing. Building a process that your team actually follows—and gets real value from—is a whole different ball game. Done wrong, it quickly becomes a bottleneck, a source of friction, or just a box-ticking exercise that nobody takes seriously.

The real goal is to create a system that’s both effective and efficient. You want to improve code quality without grinding development to a halt. This means being intentional about setting clear expectations, defining what’s in and out of scope, and most importantly, managing the human side of giving and getting feedback.

Start With a Clear Purpose

Before anyone even looks at a line of code, the team needs to agree on what a review is actually for. Is the main goal to hunt for bugs? Enforce coding standards? Improve readability? Maybe it’s to validate a new architectural pattern.

A good review touches on all of these, but having a primary objective keeps feedback focused. This simple agreement helps prevent reviews from spiraling into pointless debates over stylistic preferences—a classic time-waster. When everyone is on the same mission, the feedback stays sharp and actionable.

The Human Side of Peer Review

Let’s be honest: having your work scrutinized can feel vulnerable. A great software peer review culture acknowledges this and is built on a foundation of psychological safety. The focus has to stay on the code, never the person who wrote it.

The core principle is simple: be kind. Assume good intentions. The author is your teammate, and you're both working toward the same goal—shipping excellent software. Criticism should always be constructive, specific, and framed as a suggestion rather than a command.

Here are a few ground rules for healthy communication:

- Praise good work. See a clever solution or some exceptionally clean code? Say so! Positive reinforcement is just as vital as constructive feedback.

- Ask questions, don't make statements. Instead of saying, "This is inefficient," try, "Have you considered an alternative approach to optimize this loop?" This opens a dialogue instead of shutting it down.

- Explain your reasoning. Don’t just point out a flaw; explain why it's a problem. Link to team standards or official docs to give your suggestions weight.

By fostering a positive feedback loop, you turn the software peer review from a dreaded judgment into a collaborative learning moment for everyone.

An Actionable Checklist for Effective Reviews

To bring some structure to the process, high-performing teams lean on a clear checklist. This creates consistency and helps both authors and reviewers focus on what truly matters. For an even deeper dive, check out our guide on the best practices for code review.

A checklist ensures nothing critical slips through the cracks. It sets clear expectations for what the author should prepare and what the reviewer should look for, making the entire exchange more productive.

Actionable Peer Review Checklist

| Checklist Item | Author Responsibility | Reviewer Focus |
|---|---|---|
| Clarity and Scope | Are the PR title and description clear? Keep changes focused on a single task. | Does the code do what the description says? Are there unrelated changes that should be in a separate PR? |
| Functionality | Did you manually test the code to confirm it works? Have you covered all the edge cases? | Does the logic solve the problem correctly? Does it introduce any new bugs or regressions? |
| Readability | Use clear variable/function names. Add comments where the logic is complex or non-obvious. | Is the code easy to understand? Can another developer maintain this code a year from now? |
| Consistency | Did you follow the team’s coding standards and style guides? | Does the code follow established patterns and conventions? Any "magic numbers" or hardcoded strings? |
| Testing | Did you write or update automated tests? Do all existing tests still pass? | Do the tests provide adequate coverage? Are the tests themselves well-written and reliable? |
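As a concrete reading of the Consistency and Testing rows, the sketch below shows the kind of change a reviewer might request: replacing unexplained "magic numbers" with named constants, plus a test that pins down the boundary edge cases. The session-expiry scenario and the 30-day lifetime are hypothetical values chosen only for illustration.

```python
# Before: a reviewer would likely ask what 86400 and 30 stand for.
def is_session_expired_before(age_seconds):
    return age_seconds > 86400 * 30


# After: named constants state the intent and keep the policy in one place.
SECONDS_PER_DAY = 24 * 60 * 60
SESSION_LIFETIME_DAYS = 30  # hypothetical policy value for this example


def is_session_expired(age_seconds: int) -> bool:
    """Return True once a session is older than the allowed lifetime."""
    return age_seconds > SESSION_LIFETIME_DAYS * SECONDS_PER_DAY


def test_session_expiry_edge_cases():
    limit = SESSION_LIFETIME_DAYS * SECONDS_PER_DAY
    assert not is_session_expired(0)      # brand-new session
    assert not is_session_expired(limit)  # exactly at the limit: still valid
    assert is_session_expired(limit + 1)  # one second over: expired


test_session_expiry_edge_cases()
```

The refactor keeps behavior identical; the boundary test makes the "exactly at the limit" decision explicit, which is the kind of edge case the Functionality row asks authors to cover.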

Ultimately, a software peer review process that actually works is one that’s built for your team. It should feel less like a gatekeeper and more like a collaborative checkpoint that makes the entire team—and the codebase—stronger.

How AI Is Changing the Review Workflow

For decades, software peer review has been a purely human endeavor. It’s powerful, no doubt, but it’s also constrained by human limitations—time, focus, and even the biases we don't realize we have. Now, AI is stepping onto the scene, not to kick human reviewers out, but to give them a massive upgrade. It’s creating a review workflow that's faster, smarter, and way more effective.

Think of AI-powered tools as the ultimate coding assistant, one that never gets tired or bored. They automate the grunt work of code review, instantly flagging common bugs, sneaky security flaws, and violations of your team's style guide. These are the kinds of things a human reviewer might easily miss after a long day staring at a screen.

This shift frees up your team to focus on what people do best: debating high-level logic, questioning architectural choices, and judging the overall elegance of a solution. Instead of wasting brainpower on syntax nits, developers can finally have the meaningful, big-picture conversations that truly improve the code.

The goal here is simple: weave the review process directly into the act of coding instead of treating it as a separate, painful chore that happens hours or days later.

This Isn’t Your Dad’s Linter

Modern AI tools go way beyond what a simple linter or static analysis tool can do. They are context-aware, learning from your entire codebase to give feedback that's actually intelligent.

- Pattern Analysis: AI can spot anti-patterns or clunky code structures that, while technically correct, are terrible for performance or a nightmare to maintain.

- Performance Suggestions: It can analyze code complexity on the fly and recommend more efficient algorithms or point out potential bottlenecks before they ever cause a problem.

- Security Vulnerability Detection: Trained on massive datasets of real-world exploits, AI models can detect subtle vulnerabilities that would otherwise slip right through.

- Context-Aware Feedback: This is the real game-changer. Unlike a dumb linter, an AI tool can often figure out the intent behind the code, offering suggestions that actually help a developer achieve their goal.
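To make "technically correct but terrible for performance" concrete, here is a hand-written example of an anti-pattern that a human reviewer, or a tool doing pattern analysis, might flag. It is an illustration, not output from any particular product, and the function names are hypothetical.

```python
def find_duplicates_slow(new_ids, existing_ids):
    # Anti-pattern: `in` on a list is a linear scan, so this is
    # O(len(new_ids) * len(existing_ids)). Correct, but slow at scale.
    return [i for i in new_ids if i in existing_ids]


def find_duplicates_fast(new_ids, existing_ids):
    # Suggested fix: build a set once. Set membership is O(1) on average,
    # so the whole pass is roughly O(len(new_ids) + len(existing_ids)).
    existing = set(existing_ids)
    return [i for i in new_ids if i in existing]


print(find_duplicates_fast([3, 1, 4, 1, 5], [1, 5, 9]))  # [1, 1, 5]
```

Both versions return the same result in the same order, which is why this kind of issue tends to survive testing and is worth catching in review.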

AI doesn't just check for broken rules; it understands context. It can see that one function needs to be screamingly fast and suggest optimizations, while recognizing that another handles sensitive data and flagging potential security risks.

The Real-World Impact

Let's be clear: this is fundamentally changing how peer reviews get done. Modern AI platforms are turning the traditional process on its head by analyzing code patterns, catching bugs before they're even committed, and suggesting optimizations on the fly. One study found that teams using an AI review system saw a 40% reduction in bugs hitting production and spent 60% less time on manual reviews. With a whopping 45% of organizations already adopting these tools, it's obvious where the industry is heading.

Tools like Kluster.ai are perfect examples. By integrating directly into a developer's IDE, Kluster provides instant feedback on AI-generated code, catching logic errors, performance hogs, and security holes the moment they're written. This shrinks the feedback loop from days to minutes, letting teams merge pull requests at a speed that was previously unimaginable. You can check out a whole range of automated code review tools to see what's out there.

Of course, plugging AI into an established workflow isn't always a walk in the park. For any team looking to make the leap, successfully navigating AI implementation challenges is the first, crucial step. The future of software peer review is a partnership—a powerful blend of human insight and machine intelligence that lets teams ship higher-quality code, faster than ever before.

Navigating Common Peer Review Challenges

Even a slick software peer review process can hit some serious speed bumps. Think of it like a performance car—it needs the right fuel and regular tune-ups to keep running smoothly. Spotting the common problems early is the key to keeping your team’s workflow productive and, just as importantly, positive.

The number one complaint you'll hear? Reviews become a massive bottleneck. A pull request just sits there for days, completely blocking a feature while the developer who wrote it has already mentally moved on to five other things. This kind of slowdown is almost always a symptom of fuzzy expectations or monster-sized PRs that nobody wants to touch.

Then there's the wild inconsistency of the feedback itself. One reviewer drops a two-word "looks good," while another dives into a 30-comment saga about minor style preferences that don't really matter. This randomness makes the whole process feel like a lottery instead of a structured quality gate, leaving developers frustrated and confused.

Fixing Bottlenecks and Inconsistent Feedback

Breaking the logjam of slow, inconsistent reviews is often way simpler than it seems. It all comes down to creating clear agreements that the whole team buys into.

- Set a Response Time Goal: Agree on a reasonable window for a first look, like four business hours. This simple rule sets a clear expectation and stops PRs from collecting digital dust.

- Use a Checklist: Don't leave it to chance. A simple checklist ensures everyone is looking at the same things—functionality, readability, tests, security—and cuts out the noise from superficial feedback.

- Keep It Small: Make a team rule to keep PRs under a few hundred lines. Smaller chunks of code are way less intimidating, which means they get reviewed faster and more thoroughly.
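A response-time goal is easier to keep when something watches it. The sketch below counts the business hours a PR has been waiting and reports whether it has passed a four-hour first-response goal. It assumes a Monday to Friday, 09:00 to 17:00 working day, naive datetimes in a single time zone, and no public holidays; those assumptions and the function names are illustrative, not taken from any specific tool.

```python
from datetime import datetime, timedelta

WORKDAY_START = 9  # assumed 09:00 local time
WORKDAY_END = 17   # assumed 17:00 local time


def business_hours_between(start: datetime, end: datetime) -> float:
    """Count working hours (Mon-Fri, WORKDAY_START to WORKDAY_END) in [start, end)."""
    if end <= start:
        return 0.0
    total = timedelta()
    day = start.replace(hour=0, minute=0, second=0, microsecond=0)
    while day < end:
        if day.weekday() < 5:  # Monday=0 ... Friday=4
            window_start = day.replace(hour=WORKDAY_START)
            window_end = day.replace(hour=WORKDAY_END)
            # Only the part of [start, end) that overlaps today's working window counts.
            overlap_start = max(start, window_start)
            overlap_end = min(end, window_end)
            if overlap_end > overlap_start:
                total += overlap_end - overlap_start
        day += timedelta(days=1)
    return total.total_seconds() / 3600


def needs_nudge(opened_at: datetime, now: datetime, limit_hours: float = 4) -> bool:
    """True if a PR has waited longer than the team's first-response goal."""
    return business_hours_between(opened_at, now) > limit_hours


# Opened Friday 15:00; only Fri 15:00-17:00 and Mon 09:00-10:00 count.
opened = datetime(2024, 5, 31, 15, 0)  # 31 May 2024 was a Friday
print(business_hours_between(opened, datetime(2024, 6, 3, 10, 0)))  # 3.0
print(needs_nudge(opened, datetime(2024, 6, 3, 12, 0)))  # True (5.0 hours)
```

How you obtain a PR's creation time depends on your hosting platform and tooling, so that part is left out of the sketch.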

Dealing with People Problems

Let's be honest, the trickiest part is always the human element. Even the most well-meaning feedback can feel like a personal attack, making people defensive and killing team morale. The goal is to build a culture where everyone sees feedback as a way to make the code better together, not as a judgment on the person who wrote it.

The most stubborn myth in our industry is that senior developers are somehow "too good" for code reviews. That's not just wrong, it's dangerous. Reviews on senior-level code are where deep architectural knowledge gets shared and the whole team gets aligned on the really complex stuff.

A little training on how to give good feedback can go a long way. Teach your team to always comment on the code, never the coder. A great trick is to frame suggestions as questions, not commands.

Instead of saying, "This is wrong," try something like, "Could you walk me through your thinking here? I was wondering if another approach might be simpler." That one little change turns a potential argument into a collaborative chat. You end up with better code and a stronger team.

Frequently Asked Questions About Software Peer Review

Even with a solid process, questions always come up when teams start doing code reviews or try to improve their existing workflow. Getting ahead of these common hurdles is the key to turning good intentions into a smooth, effective habit. Here are some of the most common things developers and managers ask.

How Much Code Should I Put in a Single Review?

The golden rule is to keep it small. Seriously. A widely cited guideline is to keep changes under 400 lines of code. That number isn't arbitrary: it's roughly the most a reviewer can take in while still understanding the changes and giving meaningful feedback without getting overwhelmed.

Think about it: a focused review of 200 lines will always beat a quick skim of 2,000 lines. When a pull request gets too big, reviewer fatigue kicks in. That's when important bugs and logic flaws get missed simply because the reviewer's brain is overloaded.

Who Is the Best Person to Review My Code?

There's no single "best" person. A great code review pulls from a mix of perspectives, so you want a combination of teammates who bring different strengths to the table.

- A domain expert: You need at least one developer who knows that specific part of the codebase inside and out. They're the ones who will catch the subtle, nuanced mistakes.

- An outsider: Grab a developer from another team. They bring fresh eyes and can easily spot confusing logic or assumptions that the core team might take for granted.

- A mentor/mentee pair: Pairing a senior and a junior developer is a fantastic way to spread knowledge. The senior ensures quality while the junior learns the ropes—it’s a win-win.
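If you want reviewer assignment to follow this mix automatically, a small helper can suggest one domain expert and one outsider per PR. This is a hypothetical sketch: the `OWNERS` map, developer names, and area labels are invented, real setups often derive ownership from a file such as GitHub's `CODEOWNERS` instead, and it does not attempt to model mentor/mentee pairing.

```python
import random

# Hypothetical ownership map: developers who know each area of the codebase well.
OWNERS = {
    "payments": ["ana", "raj"],
    "search": ["li", "tom"],
    "web": ["sam", "eve"],
}


def suggest_reviewers(author, area, owners=OWNERS, rng=None):
    """Suggest one domain expert for `area` plus one developer from outside it."""
    rng = rng or random.Random()
    area_devs = set(owners.get(area, []))
    # Domain experts: people who know this area, excluding the author.
    experts = sorted(area_devs - {author})
    # Outsiders: people from other areas who do not also own this one.
    everyone = {dev for devs in owners.values() for dev in devs}
    outsiders = sorted(everyone - area_devs - {author})
    picks = []
    if experts:
        picks.append(rng.choice(experts))
    if outsiders:
        picks.append(rng.choice(outsiders))
    return picks
```

For example, `suggest_reviewers("ana", "payments")` always returns `"raj"` as the expert, followed by one randomly chosen developer from the search or web areas. Passing a seeded `random.Random` makes the choice reproducible in tests.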

How Do We Do Reviews Without Slowing Everything Down?

This is the number one fear, but a good review process actually makes you faster in the long run by cutting down on future rework. The trick is to stop reviews from becoming a bottleneck in the first place.

The time you put into a software peer review isn't a cost—it's an investment. That upfront check pays for itself many times over by catching bugs and tech debt when they are cheapest and easiest to fix.

Start by setting clear team agreements on turnaround times, like a four-hour window for initial feedback. Use asynchronous tools so no one is blocked, and let automation handle the easy stuff like style checks. That frees up your human reviewers to focus on what really matters: the logic.

Stop shipping AI hallucinations and start merging with confidence. kluster.ai provides instant, in-IDE feedback on AI-generated code, catching logic errors, security flaws, and performance issues before they ever become a pull request. Book a demo or start free at https://kluster.ai.