From Minutes to Seconds: Fast, Automated Code Review

Most automated code review tools are so slow that you quit waiting. They were built for a world where waiting minutes for feedback was acceptable, but today that delay breaks your flow. By the time the results arrive, you have already context-switched and the review turns into background noise.

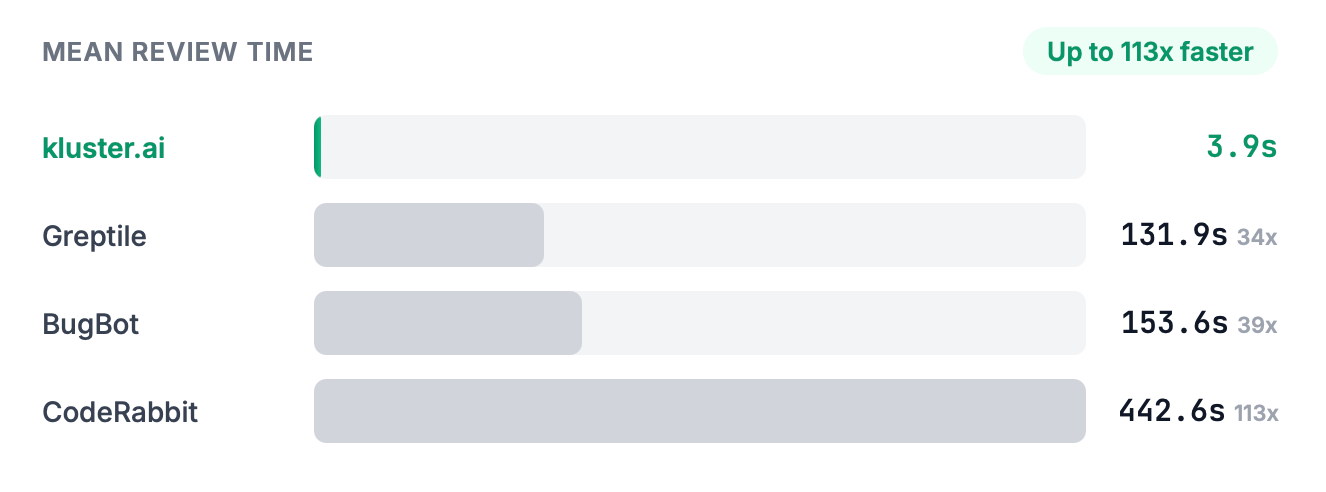

In our challenging, realistic benchmarks, kluster.ai delivers reviews in a few seconds, up to 100x faster than other tools, while achieving accuracy comparable to the best of them. The difference is in the noise: kluster.ai has higher precision and far fewer false positives, so you spend more time fixing real issues and less time dismissing spurious alerts.

This post explains how we got there and how kluster.ai works in your favorite IDE or coding agent today.

Why Speed Matters

When reviews take minutes, you use them selectively: maybe on important PRs, maybe before releases. When reviews take seconds, you use them on everything.

That shift changes behavior. Code review stops being a gate you pass through occasionally and becomes continuous feedback woven into how you work.

kluster.ai is fast enough to enable that shift. You can read more about the challenges of instant code review in one of our previous posts.

What We Offer Today: Fast Reviews in the IDE

kluster.ai runs code reviews in about 5 seconds, fast enough for feedback on every change while you still remember what you were doing, and focused on issues that are important enough to act on.

Architecture for Fast, High-Signal Reviews

Our production system is a highly optimized multi-agent architecture. Rather than sending code through a single large frontier model and waiting for a response, we decompose the review process into specialized stages that run in parallel.

Different agents handle different concerns: one focuses on security vulnerabilities, another on logic errors, another on code style, and so on. Each agent is tuned for its specific task and runs concurrently, collaborating where necessary.

For example, one of those agents tracks your dependencies against live vulnerability feeds. We cross-reference your dependencies with CVE databases, so when a library in your stack picks up a critical issue, it shows up directly in the review instead of in a security bulletin days later. When the recent React2Shell vulnerability (CVE-2025-55182) in React Server Components was disclosed, kluster.ai was already flagging affected React versions in codebases and recommending the patched releases.
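A minimal sketch of the matching step, assuming each advisory has already been reduced to a package name plus one affected version range. The `Advisory` type, the `affected` function, and the `example-ui` / `EXAMPLE-2025-0001` data are hypothetical placeholders, not kluster.ai's implementation and not real CVE data, and the version parser only handles plain `MAJOR.MINOR.PATCH` strings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Advisory:
    advisory_id: str
    package: str
    introduced: tuple[int, ...]  # first affected version (inclusive)
    fixed: tuple[int, ...]       # first patched version (exclusive)


def parse_version(version: str) -> tuple[int, ...]:
    # Simplified: plain "MAJOR.MINOR.PATCH" only, no pre-release or build tags.
    return tuple(int(part) for part in version.split("."))


def affected(dependencies: dict[str, str], advisories: list[Advisory]) -> list[str]:
    """Return one warning per dependency whose version falls in an advisory's range."""
    warnings = []
    for adv in advisories:
        version = dependencies.get(adv.package)
        if version is None:
            continue  # package not used in this project
        if adv.introduced <= parse_version(version) < adv.fixed:
            patched = ".".join(str(part) for part in adv.fixed)
            warnings.append(
                f"{adv.package} {version} is affected by {adv.advisory_id}; patched in {patched}"
            )
    return warnings


# Hypothetical feed entry and lockfile contents, for illustration only.
advisories = [Advisory("EXAMPLE-2025-0001", "example-ui", (2, 0, 0), (2, 3, 1))]
print(affected({"example-ui": "2.3.0", "other-lib": "1.0.0"}, advisories))
```

Real advisory feeds often list several affected ranges per package and use richer, ecosystem-specific version rules, so a production matcher would rely on a proper version library rather than tuple comparison.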

This parallel, multi-agent approach lets us maintain high accuracy while dramatically reducing latency. We evaluate the system extensively on our own benchmarks and tune it for high precision, so that its comments are more likely to be real issues than noise. The bottleneck shifts from a single slow pass through a large model to the slowest of several parallel paths, coordinated by an orchestrator that we have optimized aggressively. The result is comprehensive reviews, which would take minutes with a naive approach, delivered in seconds.
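The latency argument can be sketched with Python's `asyncio`: fan the diff out to several agents at once, then merge their findings. The three agent functions, the `asyncio.sleep` delays standing in for model calls, and the `Finding` type are hypothetical; the sketch omits the cross-agent collaboration and the orchestration logic of the production system.

```python
import asyncio
import time
from dataclasses import dataclass


@dataclass
class Finding:
    agent: str
    message: str


# Hypothetical specialized agents; each sleep stands in for that agent's model call.
async def security_agent(diff: str) -> list[Finding]:
    await asyncio.sleep(0.3)
    if "http.get" in diff:
        return [Finding("security", "user-controlled URL reaches an HTTP client")]
    return []


async def logic_agent(diff: str) -> list[Finding]:
    await asyncio.sleep(0.2)
    return []


async def style_agent(diff: str) -> list[Finding]:
    await asyncio.sleep(0.1)
    return []


async def review(diff: str) -> list[Finding]:
    # Fan out: all agents run concurrently, so wall-clock time tracks the
    # slowest agent rather than the sum of all agents.
    results = await asyncio.gather(security_agent(diff), logic_agent(diff), style_agent(diff))
    # Fan in: merge per-agent findings into a single review.
    return [finding for agent_findings in results for finding in agent_findings]


if __name__ == "__main__":
    start = time.perf_counter()
    findings = asyncio.run(review("resp = http.get(url, timeout=2)"))
    print(findings, f"{time.perf_counter() - start:.2f}s")
```

Because `asyncio.gather` awaits the agents concurrently, this review takes roughly 0.3 seconds (the slowest agent) instead of roughly 0.6 seconds (the sum of all three).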

Reviews in Action

In practice, kluster.ai runs where you write code: inside your IDE. Whether you trigger a review explicitly, let an AI agent call kluster.ai, or rely on ambient background reviews, the feedback appears directly in your editor.

To illustrate what our code reviews look like, here are a few examples.

Security: server-side request forgery (SSRF)

```python
def fetch_avatar(user, http):
    url = user.profile_picture_url
    resp = http.get(url, timeout=2)
    return resp.content
```

kluster.ai highlights the call and explains:

Issue: Possible SSRF via user-controlled URL (High)

`profile_picture_url` is user-controlled and passed directly to an internal HTTP client. An attacker could use this to hit internal services or metadata endpoints.

Fix: Restrict outbound requests to allowed hosts, or proxy user-supplied URLs through a safe fetch service.

This is the kind of issue that can slip in during a refactor, and it is caught before it ships.
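A minimal sketch of the first suggested fix: an allow-list check before the request is made. `ALLOWED_AVATAR_HOSTS`, `DisallowedURLError`, and `validate_avatar_url` are hypothetical names, and `http` is assumed to be a client whose `get(url, timeout=...)` behaves like the one in the original snippet.

```python
from urllib.parse import urlparse

# Hypothetical allow-list; a real project would load this from configuration.
ALLOWED_AVATAR_HOSTS = frozenset({"avatars.example.com", "cdn.example.com"})


class DisallowedURLError(ValueError):
    """Raised when a user-supplied URL points outside the allow-list."""


def validate_avatar_url(url: str) -> str:
    parsed = urlparse(url)
    # Only HTTPS is accepted; this rejects http://, file://, and similar schemes.
    if parsed.scheme != "https":
        raise DisallowedURLError(f"scheme not allowed: {parsed.scheme!r}")
    # .hostname is lowercased and excludes any "user@" prefix and port.
    host = parsed.hostname or ""
    if host not in ALLOWED_AVATAR_HOSTS:
        raise DisallowedURLError(f"host not allowed: {host!r}")
    return url


def fetch_avatar(user, http):
    url = validate_avatar_url(user.profile_picture_url)
    resp = http.get(url, timeout=2)
    return resp.content
```

Checking the parsed hostname, rather than searching the raw string, also defeats tricks like `https://avatars.example.com@evil.example/`, where the real host is the part after `@`. If the client follows redirects, those must be disabled or re-validated too, since an allowed host could otherwise redirect to an internal address.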

Performance: hidden extra network round trips

```python
def get_active_users(api, user_ids):
    return [
        api.get_user(user_id)
        for user_id in user_ids
        if api.get_user(user_id).is_active
    ]
```

kluster.ai points out the performance problem:

Issue: Duplicate API calls in a hot path (High)

`get_user` is called twice per user. In production this doubles the number of remote calls.

Fix: Call `get_user` once per user, store the result, and reuse it in the condition and the returned list.

A small change, but one that matters in frequently called code paths or large batches.
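One way to apply that fix, as a sketch that keeps the original signature: fetch each user once and filter on the fetched object. `api.get_user` and `is_active` come from the example above; nothing else is assumed.

```python
def get_active_users(api, user_ids):
    # One remote call per ID: fetch each user once, then filter on the fetched object.
    # Equivalent one-liner (Python 3.8+):
    #   [u for uid in user_ids if (u := api.get_user(uid)).is_active]
    users = (api.get_user(user_id) for user_id in user_ids)
    return [user for user in users if user.is_active]
```

The generator expression avoids building an intermediate list for large batches. If the API offered a batch endpoint, fetching all users in one call would cut round trips further, but that depends on the API in question.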

Intent: violating a team specific rule

Your team has a rule to avoid logging raw email addresses. An AI suggestion adds this:

```python
logger.info("New signup: %s", user.email)
```

Without real-time review, this line might not get flagged until PR review, or it might slip through entirely. kluster.ai catches it immediately.

Issue: PII in logs (High, intent violation)

Team policy prohibits logging raw email addresses.

Fix: Log a hashed or redacted value, or use `safe_log_user(user)` per project guidelines.
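What a redacting helper might look like, as a sketch only: the example refers to a project-specific `safe_log_user(user)` whose real implementation is not shown. The `redact_email`, `email_fingerprint`, and `log_signup` functions and `LOG_HASH_KEY` below are hypothetical. The fingerprint uses a keyed HMAC-SHA256 rather than a plain hash, because unkeyed hashes of email addresses can often be recovered by hashing lists of known addresses and comparing.

```python
import hashlib
import hmac
import logging

logger = logging.getLogger(__name__)

# Hypothetical key; load it from a secrets manager in practice, never hard-code it.
LOG_HASH_KEY = b"replace-with-a-secret-key"


def redact_email(email: str) -> str:
    """Keep the first character of the local part plus the domain: 'jane@x.com' -> 'j***@x.com'."""
    local, sep, domain = email.partition("@")
    if not sep or not local:
        return "***"  # not a recognizable address; reveal nothing
    return f"{local[0]}***@{domain}"


def email_fingerprint(email: str) -> str:
    """Keyed hash, so log lines about the same address can be correlated without storing it."""
    normalized = email.strip().lower().encode()
    return hmac.new(LOG_HASH_KEY, normalized, hashlib.sha256).hexdigest()[:16]


def log_signup(email: str) -> None:
    logger.info("New signup: %s (id %s)", redact_email(email), email_fingerprint(email))
```

The redacted form still reveals the domain; whether that is acceptable is a team policy decision.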

Security, performance, and project-specific intent are just some examples of what we detect. Whether the code came from a human or an AI assistant, kluster.ai reviews it inside the IDE and returns clear, actionable feedback in seconds.

Because kluster.ai reviews take only a few seconds, they can run on every change while you still remember what you were doing. That speed is what makes on-demand, agent-based, and ambient background review possible.

How It Shows Up In Your Workflow

Once reviews are fast enough, they do not have to be a separate step anymore.

Today kluster.ai can run in several ways, depending on how your team wants to work.

- On-demand checks in the IDE

The base workflow is explicit. In your IDE you can ask kluster.ai to review the current file or your uncommitted changes. You press a button, and in less than five seconds the review appears in your editor. This is useful for bigger refactors, risky changes, or when you want a focused review before opening a pull request.

- Reviewing code from AI agents

AI assistants generate code fast, but someone still has to check it. Without automated review, that someone is you: scanning for security holes, spotting logic errors, enforcing team conventions. Every issue you catch is time spent cleaning up after the AI.

kluster.ai reviews AI-generated code before you see it. If we find problems, that feedback goes back to the agent, which fixes its own mistakes. By the time the code reaches you, issues are already resolved. In effect, every change passes through the same reviewer, whether it came from a human or an AI. The difference is that for AI-generated code, you are not the first line of defense.
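The review-then-fix loop can be sketched as a bounded retry. `generate` and `review` are hypothetical callables standing in for the coding agent and the reviewer, and the `max_rounds` cap is our own assumption, added so that an agent unable to fix an issue does not loop forever.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Issue:
    severity: str
    message: str


def generate_with_review(
    task: str,
    generate: Callable[[str, list[Issue]], str],
    review: Callable[[str], list[Issue]],
    max_rounds: int = 3,
) -> tuple[str, list[Issue]]:
    """Review agent output and feed findings back until clean or out of rounds.

    Returns the final code and any issues still open after the last round.
    """
    code = generate(task, [])  # first attempt, no feedback yet
    issues = review(code)
    rounds = 0
    while issues and rounds < max_rounds:
        code = generate(task, issues)  # the agent fixes its own mistakes
        issues = review(code)
        rounds += 1
    return code, issues
```

Issues still open after the last round are returned alongside the code, so they can be shown to the developer instead of being silently dropped.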

- New: ambient background reviews

For teams that want zero friction, we recently introduced ambient background reviews. kluster.ai runs in the background of your IDE. You keep coding or accepting AI suggestions, and we scan recent changes asynchronously. When there is something important to say, a review appears in the editor a few seconds later. If there is nothing to say, nothing appears.

You do not need to press a button or watch a progress bar. It feels less like a separate tool and more like an always-on safety net. Instead of a pull request checkpoint, code review becomes a continuous check on every change as you work. Most tools still wait for a PR. Ambient background review makes code review feel built into your workflow, not bolted on.
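A minimal sketch of the ambient pattern as a debounced background task in `asyncio`: each change restarts a short timer, a review runs only after edits pause, and results are shown only when there are issues. `AmbientReviewer`, `review_fn`, `show_fn`, and the one-second `quiet_period` default are hypothetical; a real IDE integration would hook into the editor's own change events.

```python
import asyncio
from typing import Awaitable, Callable, Optional


class AmbientReviewer:
    """Debounced background review: wait for a pause in edits, then review once."""

    def __init__(
        self,
        review_fn: Callable[[str], Awaitable[list[str]]],
        show_fn: Callable[[list[str]], None],
        quiet_period: float = 1.0,
    ) -> None:
        self._review_fn = review_fn        # hypothetical: returns issue messages
        self._show_fn = show_fn            # hypothetical: surfaces issues in the editor
        self._quiet_period = quiet_period  # seconds without edits before reviewing
        self._pending: Optional[asyncio.Task] = None

    def on_change(self, snapshot: str) -> None:
        """Call on every edit; must run inside the event loop."""
        # A new edit cancels the previous timer (and any stale in-flight review),
        # so a burst of typing produces a single review of the latest code.
        if self._pending is not None and not self._pending.done():
            self._pending.cancel()
        self._pending = asyncio.create_task(self._review_after_pause(snapshot))

    async def _review_after_pause(self, snapshot: str) -> None:
        await asyncio.sleep(self._quiet_period)
        issues = await self._review_fn(snapshot)
        if issues:  # nothing to say, nothing appears
            self._show_fn(issues)
```

Cancelling the pending task also abandons any in-flight review of an outdated snapshot, so only the latest state of the code produces feedback.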

For the first time, code review feels as automatic as spellcheck.

Try It Free

kluster.ai brings code review down to seconds, not minutes, so you can safely review every change in your IDE. Turn it on once and get fast, accurate feedback on demand, in agent workflows, or in ambient background reviews.