Fixing AI-Generated Code Issues

When we talk about AI-generated code issues, we're not just talking about typos or syntax errors. We're referring to a whole new class of problems—everything from critical security holes and sneaky logic flaws to code that’s just a nightmare to maintain down the line.

The Hidden Risks of AI-Generated Code

AI coding assistants are incredibly fast. That speed is their main selling point, but it's also where the hidden costs start to pile up. Adopting these tools without the right guardrails is a lot like hiring a junior developer who works at lightning speed but lacks real-world experience. They'll produce code that seems to work, but it often misses the critical nuances of security, performance, and long-term quality.

This constant churn of fast, unvetted code leads to a dangerous accumulation of what we call "security debt." It’s a growing backlog of vulnerabilities and flaws that lie dormant in your codebase, just waiting for the worst possible moment to surface.

The immediate convenience is deceptive. Teams are pushing to ship features faster than ever, and it's easy to approve and merge code that looks fine on the surface. But underneath, you could be building on a foundation riddled with cracks.

The Scope of AI Code Vulnerabilities

Just how big is this problem? It's bigger than most teams think. The data from recent security analyses is pretty sobering.

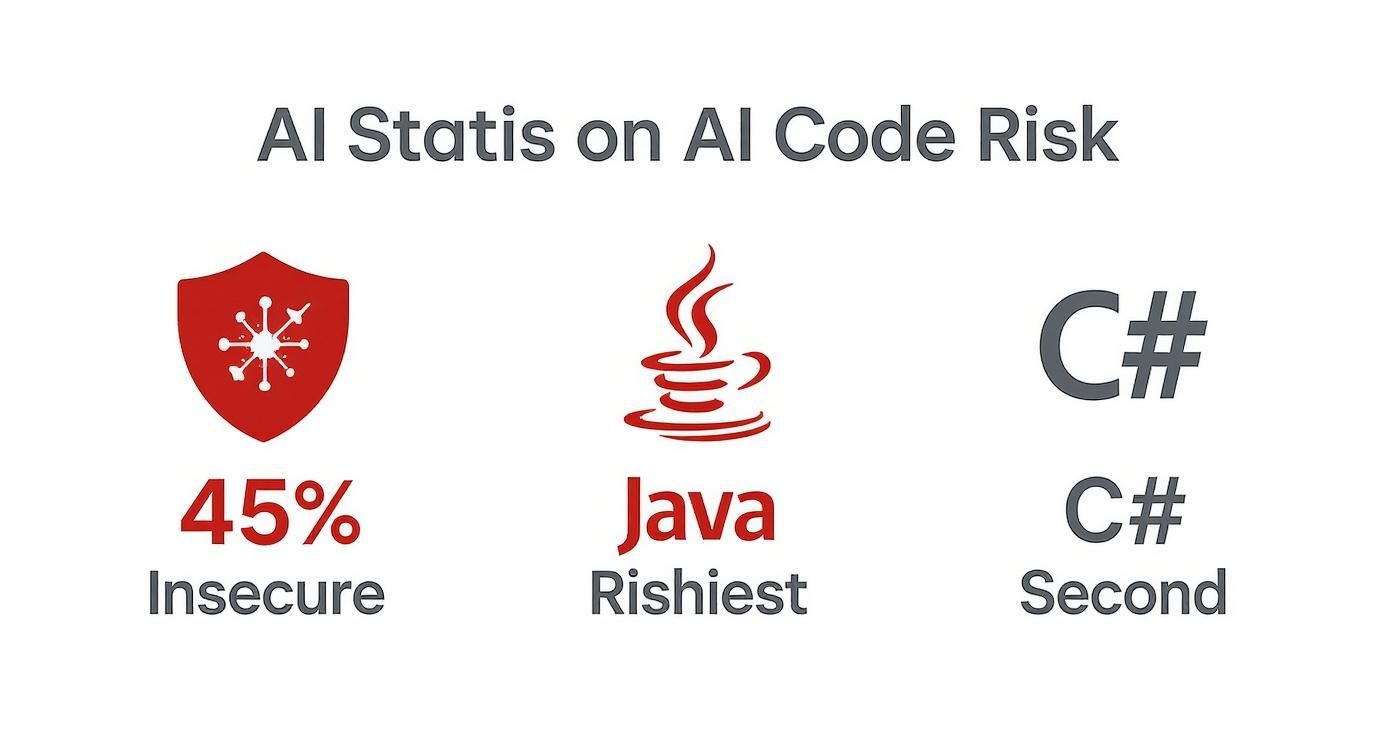

A landmark study from Veracode found that nearly half of all AI-generated code snippets contain significant security vulnerabilities. To be precise, a staggering 45% of code samples failed standard security scans.

The research, which dug into over 100 large language models, also found that some languages are riskier than others. Java was at the top of the list, with a failure rate over 70%, followed by C# at 45%. You can read the full research on these AI security risks to see the detailed breakdown.

This data isn't just a warning; it's a clear signal that we can't trust AI-generated code blindly.

This visualization really drives the point home: a huge chunk of the code our AI assistants produce is failing basic security checks, and the language you're using can dramatically change your risk profile.

Why This Matters for Your Team

These vulnerabilities aren't just abstract risks; they translate into real-world business problems. Every insecure line of code is a potential doorway for attackers, a source for data breaches, or a trigger for system crashes. The most common AI-generated code issues tend to fall into three main buckets that every engineering team needs to get ahead of.

To give you a clearer picture, here’s a quick rundown of the most common issues we see.

Top AI-Generated Code Issues at a Glance

| Issue Type | Common Example | Potential Business Impact |
|---|---|---|
| Critical Security Vulnerabilities | The AI generates code with a SQL injection flaw because it was trained on an old, insecure code example. | Data breach, regulatory fines (GDPR, CCPA), loss of customer trust, and significant financial damage. |
| Subtle Logic & Performance Bugs | An AI assistant creates an algorithm that works for small datasets but causes an N+1 query problem, slowing the application to a crawl under real-world load. | Poor user experience, application crashes, increased infrastructure costs, and damage to brand reputation. |
| Inconsistent Code Quality | The AI "hallucinates" a non-existent function or generates code that completely ignores the team's established style guide and lacks any documentation. | Increased technical debt, slower onboarding for new developers, and a codebase that's brittle and difficult to scale. |

Each of these issues, on its own, can create serious headaches. When they start piling up, they can cripple a project's momentum and introduce unacceptable levels of risk.

Let's break them down further:

- Critical Security Vulnerabilities: This is the big one. AI models are notorious for replicating insecure patterns they learned from their vast training data. Think of the classics from the OWASP Top 10, like injection flaws or broken access control. AI assistants can serve those up in seconds.

- Subtle Logic and Performance Bugs: These are the silent killers. It's not just about security. AI can write inefficient algorithms, introduce subtle race conditions, or implement sloppy error handling. The result? Application crashes, frustrated users, and a system that can't scale.

- Inconsistent Code Quality: This is a slow burn that kills productivity over time. AI-generated code often comes with zero documentation, deviates wildly from team style guides, and can even invent ("hallucinate") functions that don't exist. This makes the codebase a mess to navigate, maintain, and build upon.

Why AI Coding Assistants Create Flawed Code

To really get a handle on AI-generated code issues, you have to understand where they come from. It's easy to think of AI assistants as junior developers, but that’s not quite right. A better analogy? Imagine a student who has memorized millions of textbook pages but never actually learned the concepts behind them.

These Large Language Models (LLMs) aren't "thinking" or "reasoning" about what your code is supposed to do. They're incredibly sophisticated pattern-matching machines. They've been trained on a massive amount of public code from places like GitHub, which—let's be honest—is a minefield of outdated practices, subtle bugs, and straight-up security holes.

So when you ask an AI to write a function, it doesn't reason from first principles. It just predicts the most statistically likely sequence of code based on all the patterns it’s seen before. If a common pattern in its training data is insecure, the AI is probably going to spit that same flawed pattern right back at you.

The Problem of Insecure Training Data

The heart of the issue is that public code repositories are a total mixed bag. You've got brilliant, secure code sitting right next to deprecated libraries, vulnerable snippets from a decade ago, and code written by developers of every possible skill level. The AI learns from all of it, with no real way to tell best practices from dangerous anti-patterns.

This leads directly to flawed code. For example, if an AI has digested thousands of examples of code vulnerable to SQL injection, it might just generate a similarly weak database query for your app. It’s not being malicious; it's just repeating what it was taught.
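To make that concrete, here is a minimal sketch of the pattern using Python's built-in `sqlite3` module. The `users` table and the function names are made up for illustration; the point is only the difference between splicing input into the SQL text and letting the driver bind it as a parameter.

```python
import sqlite3


def make_demo_db():
    # Hypothetical in-memory table, used only for this illustration.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn


def find_user_unsafe(conn, username):
    # Vulnerable: user input is spliced straight into the SQL text,
    # so quotes in the input can change the structure of the query.
    query = f"SELECT id, name FROM users WHERE name = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, username):
    # Safer: the value is bound as a parameter and is never parsed as SQL.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (username,)
    ).fetchall()
```

With the input `nobody' OR '1'='1`, the unsafe version matches every row in the table, while the parameterized version matches none.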

This reliance on historical data creates a huge blind spot. One recent study found that a staggering 62% of AI-generated code solutions contain design flaws or known security vulnerabilities. The AI has no clue about your company's specific risk model or internal standards, making its output inherently untrustworthy without a second look.

"An AI tool can only resemble an intern with anterograde amnesia, which would be a bad kind of intern to have. For every new task this 'AI intern' resets back to square one without having learned a thing!"

This quote nails it. A human developer learns from feedback and gets better over time. Current AI models don't carry lessons from one task to the next unless you feed that context back in. Every new request starts from scratch, which means the same mistakes can pop up again and again.

Lack of Application and Business Context

Beyond the messy training data, another major source of AI-generated code issues is a complete lack of context. The AI doesn't know your application's architecture, your business logic, or your company's unique security policies.

Think about these critical gaps:

- Organizational Policies: Your company probably has strict rules about data handling, dependency usage, or API authentication. The AI is oblivious to all of them.

- Architectural Nuances: It doesn't know which database you use, how your microservices talk to each other, or what your internal coding standards look like.

- The Intent Behind the Code: It can't grasp the "why" behind a feature. This leads to code that might work on a technical level but is completely wrong for the actual business need.

Without this context, the AI is basically coding in a vacuum. It might generate a perfectly standard user authentication function, but one that completely sidesteps your company's custom single sign-on (SSO) integration. This problem isn't unique to code generation; you see similar issues with other AI-driven development tasks. For a broader perspective, consider the challenges of AI-generated test cases, which highlight just how much context matters.

At the end of the day, these models produce code based on probability, not comprehension. They're great for boilerplate and common algorithms but fall apart on the nuanced, context-heavy tasks that define robust and secure software. That gap between pattern matching and true understanding is exactly why human oversight and rigorous verification are still absolutely essential.

What AI-Generated Code Flaws Actually Look Like

To get a handle on the problems AI coding assistants create, you first need to know what you’re looking for. It’s like a mechanic diagnosing a weird engine noise—you listen for specific rattles and hums that point to the root cause. AI-generated code doesn’t just fail in one way; it breaks in several distinct and important categories.

We can break these flaws down into three main buckets. Think of this as a mental checklist to run through anytime you’re reviewing code that came from an AI. Getting these categories straight is the first step to building a solid defense.

Critical Security Vulnerabilities

This is the most dangerous stuff. These are the flaws that lead directly to data breaches, system takeovers, and the kind of damage that makes headlines. AI models learn from huge piles of public code on the internet, and unfortunately, a lot of that code is riddled with classic security mistakes. The AI just learns and repeats them.

The scale of this problem is hard to wrap your head around. One recent analysis estimated that AI assistants are pumping out over a billion lines of code every day. If you conservatively assume there's just one security flaw per 10,000 lines, that’s 100,000 new vulnerabilities being introduced daily. The same report found that roughly 40% of AI-generated applications had vulnerabilities. That’s not a fluke; it's a systemic issue. You can read more about these findings on AI-driven code risk.

And we're not talking about obscure, zero-day exploits here. We're talking about the greatest hits of security blunders that developers have been fighting for decades.

- Injection Flaws: The AI might spit out a database query that stitches user input directly into the string, creating a textbook SQL injection vulnerability waiting to be exploited.

- Broken Access Control: It could generate an API endpoint that completely forgets to check if the user actually has permission to see or change the data they're asking for.

- Insecure Deserialization: A model might suggest using a library to read data from an untrusted source without validating it first, opening a door for attackers to run their own code on your server.
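Broken access control is easy to miss in review because the flawed handler returns correct data for the happy path. The sketch below is framework-free and entirely hypothetical (`User`, `Invoice`, `INVOICES`, and `Forbidden` are invented for illustration); it contrasts a handler that skips the ownership check with one that enforces it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    id: int
    is_admin: bool = False


@dataclass(frozen=True)
class Invoice:
    id: int
    owner_id: int
    total_cents: int


# Hypothetical in-memory store standing in for a real database.
INVOICES = {
    1: Invoice(1, owner_id=10, total_cents=4200),
    2: Invoice(2, owner_id=20, total_cents=9900),
}


class Forbidden(Exception):
    """Raised when the caller may not access the requested record."""


def get_invoice_unchecked(current_user, invoice_id):
    # The flawed pattern: any caller can read any invoice by guessing its ID.
    return INVOICES[invoice_id]


def get_invoice(current_user, invoice_id):
    # The fix: verify ownership (or an admin role) before returning data.
    invoice = INVOICES[invoice_id]
    if invoice.owner_id != current_user.id and not current_user.is_admin:
        raise Forbidden(f"user {current_user.id} may not read invoice {invoice_id}")
    return invoice
```

A reviewer's question for every generated endpoint is the same: where is the line that decides whether this caller is allowed to see this record?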

The OWASP Top 10 is still the gold standard for understanding these risks, and AI-generated code routinely commits offenses from nearly every category on that list. Your review process has to be laser-focused on these high-impact vulnerabilities, because the AI certainly isn't.

Subtle Logic and Performance Bugs

Beyond the glaring security holes, AI assistants are absolute masters at creating subtle bugs. These are the sneaky ones that slip right through basic tests only to blow up in production when your application is under real-world stress.

These kinds of AI-generated code issues aren't about security—they’re about correctness and efficiency. The AI doesn’t truly understand the why behind your business logic, so it often produces code that works on the surface but is deeply flawed underneath.

You ask an AI to process a list of items. It gives you a simple solution with a nested loop—an O(n²) algorithm. It runs instantly with your test data of 10 items, but the moment it sees 10,000 items in production, the whole system grinds to a halt.
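As a rough illustration of that scenario, here are two hypothetical duplicate finders. The pairwise version does about n(n-1)/2 comparisons: 45 for 10 items, but 49,995,000 for 10,000. The set-based version makes a single pass, assuming the items are hashable.

```python
def find_duplicates_quadratic(items):
    # Compares every pair of positions: about n * (n - 1) / 2 comparisons.
    dupes = []
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] == items[j] and items[i] not in dupes:
                dupes.append(items[i])
    return dupes


def find_duplicates_linear(items):
    # One pass with sets: expected O(n) time for hashable items.
    seen, dupes = set(), set()
    for item in items:
        if item in seen:
            dupes.add(item)
        else:
            seen.add(item)
    return dupes
```

Both functions report the same duplicates. The difference only shows up once the input grows, which is exactly why small test fixtures hide it.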

Here are a few common culprits in this category:

- Inefficient Algorithms: Just like the example above, the AI generates code with terrible time or space complexity that won't scale.

- Race Conditions: It writes code that lets multiple threads access the same data without any locks, leading to totally unpredictable behavior and corrupted data.

- Lousy Error Handling: The AI might generate code that just swallows exceptions or fails to consider edge cases, making your application brittle and a nightmare to debug later.
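The race-condition item is the hardest of these to spot by reading code, so here is a small sketch using Python's `threading` module. The counter classes and the `run` helper are hypothetical. The unsafe version splits the read and the write, which lets concurrent threads overwrite each other's updates; the safe version guards the update with a lock.

```python
import threading


class UnsafeCounter:
    def __init__(self):
        self.value = 0

    def increment(self):
        # Read-modify-write is not atomic: another thread can update
        # self.value between these two lines, and that update is lost.
        current = self.value
        self.value = current + 1


class SafeCounter:
    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment(self):
        # The lock makes the read-modify-write a single critical section.
        with self._lock:
            self.value += 1


def run(counter, threads=8, per_thread=10_000):
    # Hammer the counter from several threads and return the final value.
    def work():
        for _ in range(per_thread):
            counter.increment()

    workers = [threading.Thread(target=work) for _ in range(threads)]
    for worker in workers:
        worker.start()
    for worker in workers:
        worker.join()
    return counter.value
```

The locked counter always ends at `threads * per_thread`. The unsafe one may or may not come up short on any given run, and that unpredictability is what makes this class of bug so easy to ship.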

Quality and Maintainability Issues

The final category of flaws is all about the long game. This is the technical debt that AI can rack up at a terrifying pace, leaving you with a codebase that’s impossible to understand, modify, or build upon. Clean, maintainable code isn't a luxury; it's essential for any team that wants to keep moving fast.

This is the kind of flaw that gets overlooked because, technically, the code works. But it tramples all over your team’s established best practices and coding standards, making life miserable for the next developer who has to touch it.

Common maintainability headaches include:

- Inconsistent Style: The AI generates code that completely ignores your team’s formatting rules, naming conventions, and style guide.

- Zero Documentation: You get a block of code with no comments or docstrings, leaving everyone to guess what it does and why.

- "Hallucinated" API Calls: In one of the most frustrating failure modes, AI models will just invent function calls or library methods that don't exist, leading to build failures and pure confusion.
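Hallucinated calls are one of the few flaws you can check mechanically. As a minimal sketch (the helper name `api_exists` is made up), the function below imports a module and walks a dotted attribute path to confirm the name actually resolves. Importing runs the module's top-level code, so this is only appropriate for packages you already trust.

```python
import importlib


def api_exists(module_name, attr_path):
    """Return True if module_name imports and attr_path resolves on it."""
    try:
        obj = importlib.import_module(module_name)
    except ImportError:
        return False
    # Walk dotted paths such as "path.join" one attribute at a time.
    for part in attr_path.split("."):
        if not hasattr(obj, part):
            return False
        obj = getattr(obj, part)
    return True
```

For example, `api_exists("json", "loads")` is true, while `api_exists("json", "parse")` is false because Python's `json` module has no `parse` function. A check like this confirms a name exists; it says nothing about whether the call is used correctly.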

By keeping these three buckets in mind—Security, Logic, and Quality—you can approach AI-generated code with a structured, critical eye. It's the only way to catch the most common and damaging flaws before they make it into production.

How to Detect and Verify AI-Generated Code

Knowing that AI-generated code issues are everywhere is one thing. Catching them before they blow up in production is another game entirely. The single most important shift you can make is to stop treating AI-generated code as a finished product.

Instead, think of it as a first draft from an enthusiastic but very green junior developer. It looks plausible, but it absolutely needs a thorough review before it gets anywhere near your main branch. This means you need a multi-layered game plan, mixing smart automation with sharp human oversight. Relying on just one is a recipe for disaster.

Integrate Automated Security Scanning

Your first line of defense is always automation. By plugging security scanning tools directly into your development workflow, you create a safety net that catches the low-hanging fruit and common vulnerabilities before a developer even thinks about opening a pull request.

These tools aren't new, but they’ve become mission-critical with the flood of AI-generated code. They act as an automated quality gate, enforcing a baseline of security for every single code suggestion.

- Static Application Security Testing (SAST): SAST tools are like a spell-checker for security flaws. They scan your raw source code before it’s even compiled, looking for well-known red flags like SQL injection patterns, hardcoded secrets, or sketchy, outdated libraries.

- Dynamic Application Security Testing (DAST): DAST tools take the opposite approach. They test your application while it's actually running, poking and prodding it from the outside just like an attacker would. This is how you find vulnerabilities like cross-site scripting (XSS) or broken access controls that only show up in a live environment.
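To give a feel for what a static check does under the hood, here is a toy rule built on Python's standard `ast` module. It is a sketch, not a substitute for a real SAST tool: the function name `find_dynamic_sql` is hypothetical, and the rule only flags `.execute()` calls whose first argument is an f-string or a string built with `+` or `%`. It misses `.format()` calls and strings assembled on an earlier line.

```python
import ast


def find_dynamic_sql(source):
    """Return line numbers where .execute() receives a dynamically built string."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        func = node.func
        if not (isinstance(func, ast.Attribute) and func.attr == "execute"):
            continue
        if not node.args:
            continue
        first = node.args[0]
        # f-strings parse as JoinedStr; "a" + b and "a" % b parse as BinOp.
        if isinstance(first, (ast.JoinedStr, ast.BinOp)):
            findings.append(node.lineno)
    return sorted(findings)


# Sample input: line 3 and line 5 build SQL dynamically, line 4 is parameterized.
SAMPLE = '''
def lookup(cur, name):
    cur.execute(f"SELECT * FROM users WHERE name = '{name}'")
    cur.execute("SELECT * FROM users WHERE name = ?", (name,))
    cur.execute("SELECT * FROM users WHERE name = '" + name + "'")
'''
```

Calling `find_dynamic_sql(SAMPLE)` flags lines 3 and 5 and leaves the parameterized query on line 4 alone. Production scanners apply hundreds of rules like this one, with data-flow tracking on top.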

Putting a solid testing framework in place is non-negotiable for checking AI code quality. To really bulletproof your pipeline, you should explore some advanced automated testing strategies that give you much deeper coverage.

Adopt a Human-in-the-Loop Model

Automation is great, but it’s not magic. It can’t catch everything. Subtle logic bombs, missed business rules, and horribly inefficient algorithms will sail right past most scanners. This is where you need an experienced human to step in, guided by one simple, powerful principle.

Treat every piece of AI-generated code as if it were written by a brand-new junior developer. It must be reviewed, understood, and validated before being accepted.

This mindset changes everything. It turns the developer from a passive code-paster into an active, critical verifier. An experienced engineer can spot problems that an AI, with zero business context, could never even dream of. This adds a bit of friction, but as real-time code generation gets more complex, it's absolutely essential. If you want to go deeper on this, check out this post on why real-time AI code review is harder than you think.

Best Practices for Manual Review

A good manual review process isn't random; it's a structured hunt for the specific kinds of mistakes AI assistants are known to make.

Here’s a practical checklist your team can use every time they review AI code:

- Verify the Logic: Forget syntax for a second. Does the code actually do what you asked it to do? AI models are notorious for "hallucinating" solutions or completely misinterpreting the prompt, leading to code that runs perfectly but is functionally wrong.

- Check for Edge Cases: AI is great at coding the "happy path" but often trips over the weird stuff. Reviewers need to hammer on the code with null inputs, empty arrays, zero values, and any other boundary condition they can think of.

- Assess Performance: Is the code efficient, or is it a ticking time bomb? Look for classic performance killers like nested loops over huge datasets (O(n²) complexity) or lazy database queries that will grind your application to a halt under real-world load.

- Enforce Team Standards: The AI doesn't know your project's style guide, naming conventions, or architectural patterns. Human review is the only way to ensure the new code doesn’t stick out like a sore thumb and create a maintenance nightmare down the road.
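The edge-case item on that checklist is the easiest to turn into code. As a small sketch (both functions and the chosen "empty input returns 0.0" behavior are assumptions made for illustration), compare a happy-path helper of the kind an assistant often produces with a hardened version and the boundary checks a reviewer might write for it.

```python
def naive_average(values):
    # Happy-path only: raises ZeroDivisionError on an empty list
    # and TypeError on None.
    return sum(values) / len(values)


def average(values):
    """Mean of a sequence of numbers; empty or missing input yields 0.0."""
    if not values:
        return 0.0
    return sum(values) / len(values)


def check_edge_cases():
    assert average([2, 4, 6]) == 4.0   # happy path
    assert average([]) == 0.0          # empty input
    assert average(None) == 0.0        # missing input
    assert average([0, 0]) == 0.0      # zero values
    assert average([-5, 5]) == 0.0     # mixed signs
    assert average([7]) == 7.0         # single element
```

Whether an empty input should return `0.0`, return `None`, or raise is a business decision the AI cannot make for you. The review step is where someone decides and writes it down as a test.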

By combining sharp automated scanning with this kind of disciplined, human-centric review, you build a powerful system for catching AI-generated code issues. This dual approach lets your team move fast with AI without taking on all its baggage.

Building a Secure AI-Assisted Development Lifecycle

To get the most out of AI coding assistants without introducing a ton of risk, you need a strategy that wraps security around every single phase of development. Just letting your team use these tools without a plan is like handing out company credit cards with no spending limits—it's a recipe for disaster.

A secure, AI-assisted Software Development Lifecycle (SDLC) isn't about blocking AI. It’s about building smart guardrails that protect your codebase from common AI-generated code issues.

This means shifting security "left," embedding safeguards right into your developers' daily workflow. The goal is to create a layered defense that makes doing the secure thing the easiest thing. It starts with empowering your developers and ends with automated enforcement, ensuring that the speed you gain from AI doesn't come at the cost of security.

Empower Developers With Secure Prompting

Your first and best line of defense is the developer at the keyboard. The quality of the code an AI spits out is directly tied to the quality of the prompt that created it. Simply asking an AI to "write a function to handle user uploads" is just asking for insecure, boilerplate code.

Instead, your team needs to get good at secure prompt engineering. This means teaching developers to be incredibly specific with their requests, including security constraints right in the prompt itself. This practice nudges the AI toward generating safer code from the get-go.

For instance, a much better prompt would be:

"Create a Python function using the Flask framework to handle file uploads. Ensure it validates the file type to only allow PNG or JPG files, checks the file size to be under 5MB, and sanitizes the filename to prevent directory traversal attacks."

This level of detail dramatically cuts down the chances of the AI introducing a basic vulnerability. But remember, even the best prompts need verification. The AI can still make mistakes or just ignore your constraints.
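For reference when reviewing what the assistant hands back, here is a framework-agnostic sketch of the three checks named in that prompt. The Flask route wiring is deliberately left out, and `validate_upload`, `sanitize_filename`, and `UploadRejected` are hypothetical names. It also only inspects the file extension; a stricter version would check the file's actual content as well.

```python
import os
import re

# Assumption: ".jpeg" is treated as JPG alongside ".jpg".
ALLOWED_EXTENSIONS = {".png", ".jpg", ".jpeg"}
MAX_BYTES = 5 * 1024 * 1024  # the 5MB limit from the prompt


class UploadRejected(ValueError):
    """Raised when an upload fails one of the checks."""


def sanitize_filename(raw_name):
    # Drop any directory components (both slash styles), then keep only a
    # conservative character set and strip leading dots.
    base = os.path.basename(raw_name.replace("\\", "/"))
    cleaned = re.sub(r"[^A-Za-z0-9._-]", "_", base).lstrip(".")
    if not cleaned:
        raise UploadRejected("empty filename after sanitizing")
    return cleaned


def validate_upload(raw_name, data):
    """Return a safe filename, or raise UploadRejected."""
    name = sanitize_filename(raw_name)
    ext = os.path.splitext(name)[1].lower()
    if ext not in ALLOWED_EXTENSIONS:
        raise UploadRejected(f"file type {ext or '(none)'} is not allowed")
    if len(data) >= MAX_BYTES:
        raise UploadRejected("file must be under 5MB")
    return name
```

If the generated code is missing any one of these three checks, or performs them in an order that lets an unsanitized name reach the filesystem, that is the review finding.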

Establish Clear AI Code Review Policies

Once the code is generated, the next critical checkpoint is the code review. Let's be honest, your existing review process probably isn't ready for the unique ways AI can fail. It’s time to establish a clear policy: treat AI-generated code with the same skepticism you'd apply to code from a brand-new junior developer.

This means a human needs to understand and validate every single line before it gets merged. Your code review checklist should be updated to specifically look for common AI flaws.

- Logic and Intent Validation: Did the code solve the actual problem, or just what the AI thought you asked for?

- Security Scrutiny: Actively hunt for OWASP Top 10 vulnerabilities like injection, broken access control, and insecure configurations.

- Performance Analysis: Check for sloppy, inefficient loops, unnecessary database calls, or other performance anti-patterns an AI might introduce.

Integrating powerful automated code review tools can seriously supercharge this process. They flag common issues automatically, freeing up your human reviewers to focus on the tricky business logic and architectural decisions.

Configure In-IDE Security Linters

The absolute best time to fix a bug is the second it’s written. By integrating security linters and real-time scanners directly into the Integrated Development Environment (IDE), you give developers instant feedback. This is miles more effective than waiting for a CI/CD pipeline to fail minutes or even hours later.

These in-IDE tools can flag potential security vulnerabilities as the code is being generated, offering suggestions and highlighting risky patterns on the fly. This creates a tight feedback loop that not only catches errors but also teaches developers secure coding practices in real time. This immediate verification is key to stopping flawed code from ever being committed in the first place.

Enforce Security Gates In CI/CD

Your final line of defense in a secure SDLC is the CI/CD pipeline. This is where you automatically enforce your non-negotiable security standards. By setting up security gates, you ensure that no code—whether written by a human or an AI—gets deployed to production if it fails to meet your organization's security baseline.
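A security gate can be as simple as a script that reads scanner output and returns a non-zero exit code. The sketch below assumes a made-up findings format: a JSON list of objects with `rule`, `severity`, and `file` keys. Real scanners each have their own report schema, so the parsing would need to be adapted.

```python
import json
import sys

SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3, "critical": 4}


def blocking_findings(findings, threshold="high"):
    """Return findings at or above the threshold severity."""
    limit = SEVERITY_RANK[threshold]
    blockers = []
    for finding in findings:
        severity = str(finding.get("severity", "")).lower()
        # Fail closed: unknown or missing severities count as blocking.
        if SEVERITY_RANK.get(severity, limit) >= limit:
            blockers.append(finding)
    return blockers


def main(argv):
    """Return 0 to pass the gate, 1 to block, 2 on a usage error."""
    if len(argv) != 2:
        print("usage: gate.py FINDINGS.json", file=sys.stderr)
        return 2
    with open(argv[1], encoding="utf-8") as handle:
        findings = json.load(handle)
    blockers = blocking_findings(findings)
    for finding in blockers:
        print(
            f"BLOCKED: {finding.get('rule', '?')} "
            f"({finding.get('severity', '?')}) in {finding.get('file', '?')}"
        )
    return 1 if blockers else 0
```

Wired into a pipeline as `sys.exit(main(sys.argv))`, any high or critical finding fails the job. Treating unrecognized severities as blocking is a deliberate choice here: a gate that silently passes on input it doesn't understand isn't much of a gate.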

A quick checklist of recommended actions and tools can help visualize how to integrate these practices across the development lifecycle.

Secure AI Integration Checklist Across the SDLC

| SDLC Stage | Key Action | Recommended Tool or Practice |
|---|---|---|
| Planning & Design | Train developers on secure prompting techniques. | Internal workshops, secure coding guidelines. |
| Development | Use in-IDE security linters for real-time feedback. | Snyk Code, SonarLint. |
| Code Review | Mandate human review of all AI-generated code. | Manual review process, Kluster. |
| Testing (CI) | Run automated security scans on every commit. | SAST (e.g., Semgrep), DAST, dependency scanning. |
| Deployment (CD) | Implement security gates to block insecure releases. | CI/CD policy-as-code (e.g., OPA), secret scanning. |

By building this layered defense, from developer education all the way to automated pipeline enforcement, you create a resilient system. This approach allows your team to get all the productivity benefits of AI while actively managing and mitigating the inherent risks of AI-generated code issues.

Adopting AI in Your Workflow Responsibly

Let’s be honest: AI coding assistants are incredible, but they’re far from flawless. The future isn't about avoiding AI; it's about integrating it smartly. What good is saving five minutes generating a function if you spend five hours debugging a subtle security flaw it introduced in production?

Ignoring AI-generated code issues isn't a strategy—it's just accumulating security debt. Sooner or later, that bill comes due.

The smartest way forward is to treat every piece of AI-generated code as a first draft from a brilliant but dangerously naive intern. It looks plausible, but it absolutely requires a senior eye. This means building a healthy skepticism and solid verification processes into every single step of your workflow.

Fostering a Culture of Verification

Getting this right is more than a tooling problem; it's a culture shift. Your team has to move from blindly accepting AI suggestions to actively challenging and validating them. This "human-in-the-loop" approach isn't optional—it's the only way to build secure, reliable software with these new tools.

It’s about turning developers from passive code consumers into active supervisors of their AI assistants.

AI coding assistants are not a replacement for engineering expertise. They are a tool, and like any powerful tool, they must be used with skill, oversight, and a deep understanding of their limitations.

Your First Actionable Step

Building a secure, AI-powered workflow is a marathon, not a sprint. The best way to start is to pick one tangible practice from this guide and put it into motion today.

Don't overthink it. Just choose one:

- Update your code review policy to explicitly require manual verification for all AI-generated contributions.

- Integrate a security linter like Kluster into your IDE to catch issues in real-time.

- Hold a team workshop on secure prompt engineering to get better, safer code from the very beginning.

Taking one small, deliberate step is all it takes to start building the foundation for a more secure and efficient future.

Frequently Asked Questions

Got questions about dealing with AI-generated code? You're not alone. Here are some of the most common things development teams are asking as they navigate this new world.

Can I Just Ask AI to Write Secure Code?

You can, but you really shouldn't trust it blindly. Think of it like this: you can give a brand-new chef a complex recipe, and they might follow the steps perfectly. But they don't have the years of experience to taste the ingredients and know if something is off. They lack the intuition.

AI models are the same. They have no real context for your business. They don't know your specific security policies, your architectural quirks, or the unique threats you face. Even with the best prompt in the world, an AI can still introduce subtle flaws or miss something critical. A human developer's review is the only way to be sure.

What Is the Most Important Step to Prevent AI Vulnerabilities?

The single most important thing you can do is keep a "human-in-the-loop." Seriously. No tool, no process, nothing can replace the critical judgment of an experienced developer. Rigorous, manual code review is still your best defense against the weird and subtle bugs that AI can introduce.

Automation is great for catching the low-hanging fruit, but only a human can validate the business logic, sanity-check the performance, and confirm the code actually does what it's supposed to. A good rule of thumb? Treat every chunk of AI-generated code like it came from a brand-new junior dev. It needs that second set of eyes before it gets anywhere near your main branch.

Are Some Programming Languages Riskier for AI?

Yep, some languages seem to trip up AI more than others. Research has shown that languages like Java can produce a higher rate of security flaws in AI-generated code compared to something like Python or JavaScript.

But don't get too comfortable just because you're using a "safer" language. No language is immune to AI-generated code issues. The problem isn't the language itself; it's the AI's training data and its total lack of real-world context. Secure coding practices are universal and non-negotiable, no matter what your tech stack is.

How Do I Justify Investing in Security Tools for AI Code?

It's all about return on investment (ROI). The argument is simple: it is always, always cheaper to catch a vulnerability during development than to fix it in production.

Think about the cost of a developer spending a few minutes with a verification tool inside their IDE. Now, compare that to the cost of a data breach in production—we're talking emergency patching, brand damage, angry customers, and maybe even regulatory fines. Investing in tools that catch AI-generated code issues early isn't an expense; it's insurance against a much bigger, much more expensive disaster down the road.
